Stop Silent Failures: n8n Error Handling System

Automation workflows in n8n can silently fail, causing costly data loss and unexpected downtime. These silent failures often go unnoticed until manual firefighting begins, costing time and reliability. Traditional error handling approaches that rely solely on notifications are insufficient to guarantee production-grade resilience.

To address this challenge, the ‘Mission Control’ architecture enables you to build robust, self-healing workflows in n8n. This comprehensive system combines node-level retries, centralized error catching workflows, and advanced patterns like circuit breakers and dead letter queues, providing a reliable foundation for resilient automation.

By following this guide, you will gain practical skills to implement n8n error handling strategies with copy-pasteable JSON templates, detailed workflow setups, and integration with enterprise-grade observability tools for monitoring and alerting.

Key Takeaway

- n8n error handling lets you build resilient workflows that recover from failures automatically.

- Centralized error workflows detect and log the silent failures that can stop your automation.

- Node-level retries and error outputs offer granular control over individual node failures.

- Advanced patterns like circuit breakers and dead letter queues prevent cascading failures.

- Integration with tools like Sentry and Datadog enables real-time monitoring and alerts.

Author Credentials

- 📝 Written by: The Nguyen

- ✅ Reviewed by: Automation Expert with extensive n8n implementation experience

- 📅 Last updated: 06 January 2026

n8n & Automation Workflow Resilience

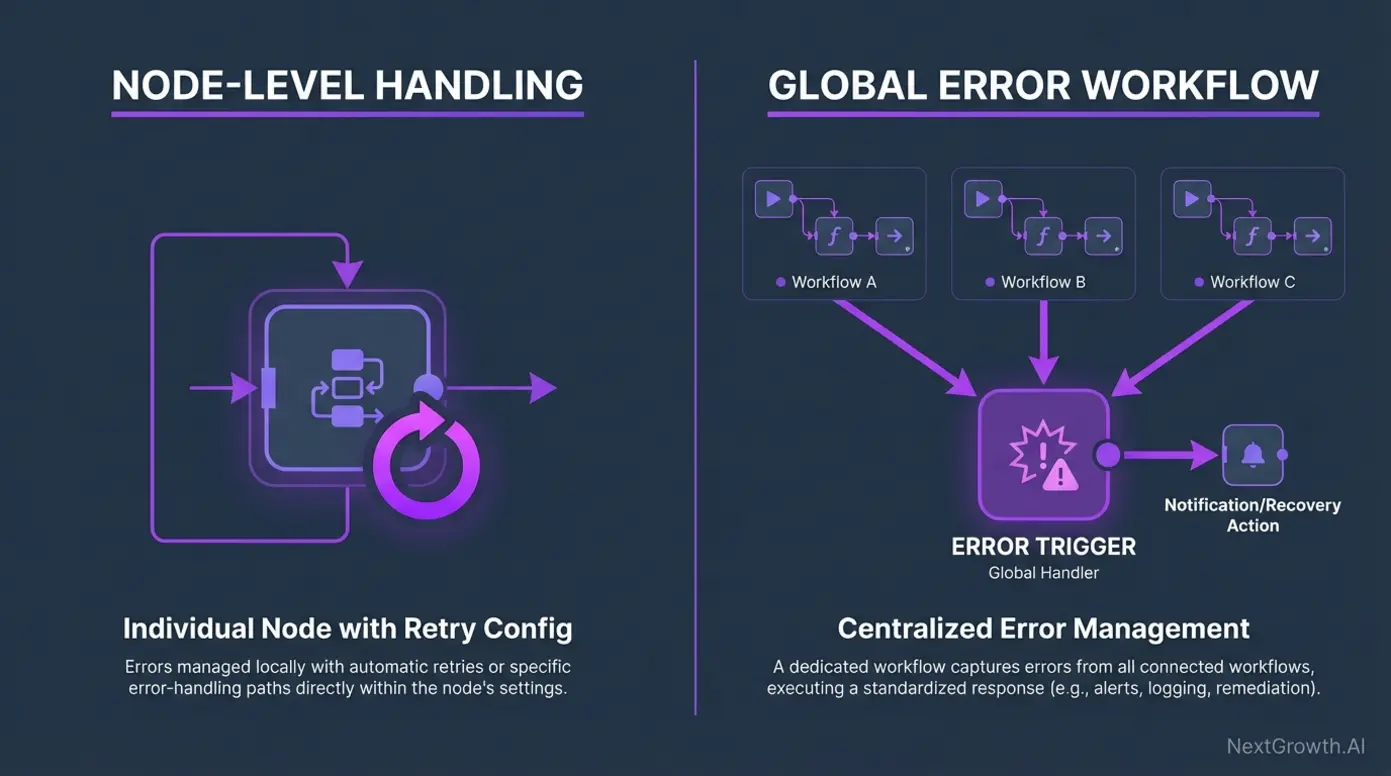

Effective n8n error handling relies on combining node-level settings with global error workflows to ensure no failure goes unnoticed. Node-level configurations like retries and error outputs provide fine control, while global error workflows catch failures anywhere in the automation chain, enabling centralized management.

Understanding Node-Level vs Global Error Handling

Node-level error handling manages failures within individual nodes. In a node’s Settings you can enable “Retry On Fail” to re-run the node automatically after a transient error, or set the “On Error” option to “Continue (using error output)” to route failed items to an alternative branch. Older n8n versions expose this as a “Continue On Fail” toggle instead. These approaches give granular control but require careful design to avoid masking critical failures.

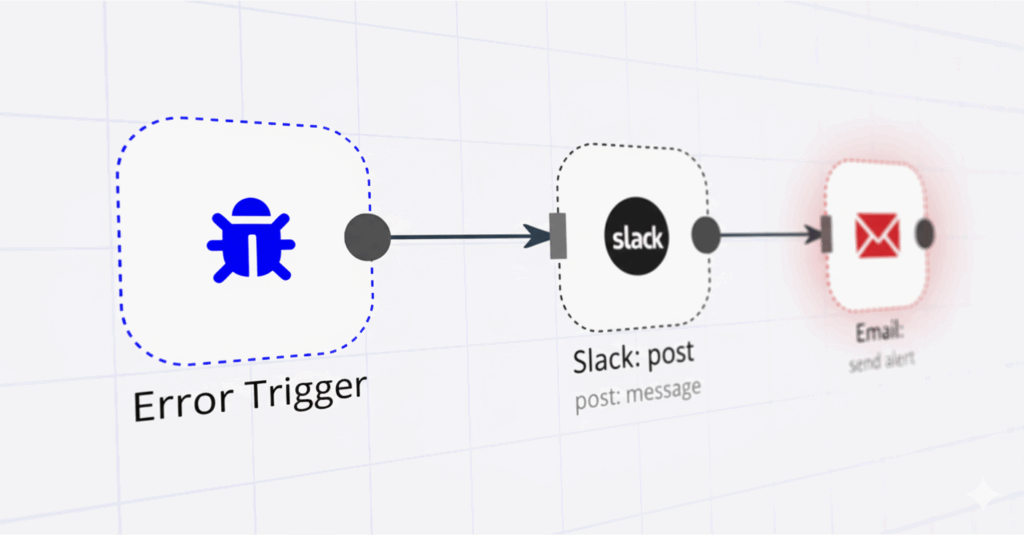

Global error workflows use the special Error Trigger node, allowing the entire workflow system to funnel failures into a dedicated recovery or logging workflow. This centralizes error management, enabling consistent logging, notifications, and recovery strategies across your automation.

Both mechanisms complement each other: node-level handles immediate, local failures, while global workflows manage end-to-end resilience and auditing.

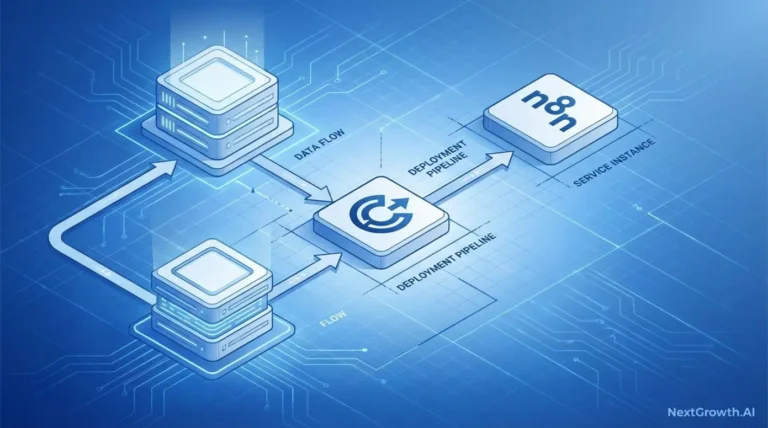

Setting Up the Global Error Workflow Using the Error Trigger Node

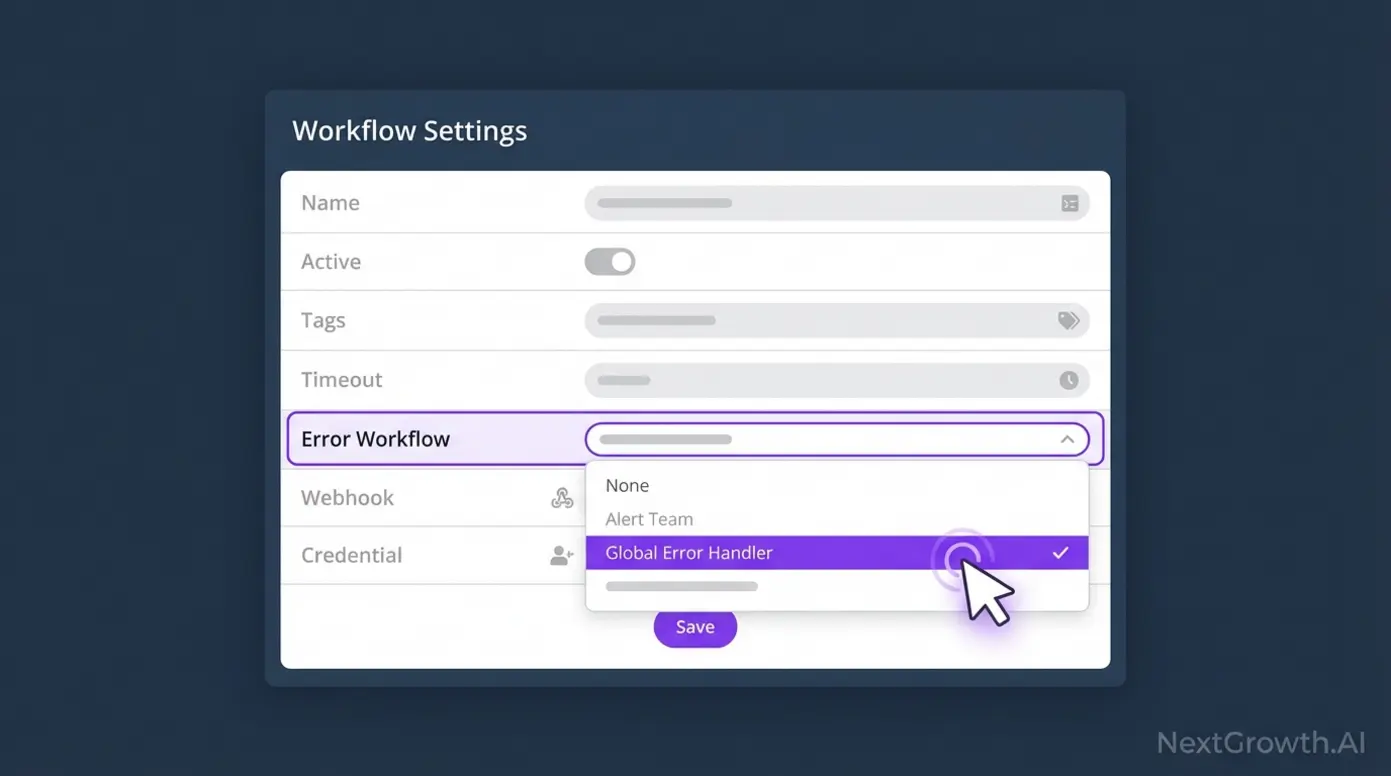

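Setting up a Global Error Workflow in n8n begins by creating a dedicated workflow containing the Error Trigger node. This node fires whenever a workflow that names it as its error workflow fails during an automatic execution, allowing you to react centrally. Manual test runs of the failing workflow do not trigger it.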

Step-by-step setup:

- Create a new workflow and add the Error Trigger node as the starting point.

- Design this workflow to receive error metadata such as workflow name, error message, and node details.

- In your production workflows’ Settings, select this new workflow under the Error Workflow dropdown.

- Customize the error workflow to log errors or send alerts.

Configuring this central workflow lets you collect, analyze, and respond to n8n workflow failures across your environment automatically. For more detail, see the post “Error Trigger usage in course curriculum”.
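As a rough illustration of the error metadata mentioned in the steps above, the sketch below flattens one Error Trigger item into a single log record. The nested `execution` / `workflow` layout follows the example shown in n8n's Error Trigger documentation, but treat the field names as assumptions and confirm them against a real failed execution on your version. `flatten_error_item` and the record keys are hypothetical names chosen for this example, and the sample values are made up.

```python
from datetime import datetime, timezone


def flatten_error_item(item: dict) -> dict:
    """Turn one Error Trigger item into a flat record suitable for logging.

    Assumes the nested `execution` / `workflow` layout; missing fields
    fall back to None instead of raising.
    """
    execution = item.get("execution") or {}
    workflow = item.get("workflow") or {}
    error = execution.get("error") or {}
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "workflow_id": workflow.get("id"),
        "workflow_name": workflow.get("name"),
        "execution_id": execution.get("id"),
        "execution_url": execution.get("url"),
        "failed_node": execution.get("lastNodeExecuted"),
        "error_message": error.get("message"),
    }


# Sample item shaped like the payload an error workflow receives (values are made up).
sample = {
    "execution": {
        "id": "231",
        "url": "https://n8n.example.com/execution/231",
        "error": {"message": "Example Error Message", "stack": "Stacktrace"},
        "lastNodeExecuted": "Node With Error",
        "mode": "trigger",
    },
    "workflow": {"id": "1", "name": "Example Workflow"},
}

record = flatten_error_item(sample)
```

A flat record like this is easier to write to a spreadsheet row or database table than the nested original, and the `.get` fallbacks keep the error workflow itself from failing on an unexpected payload.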

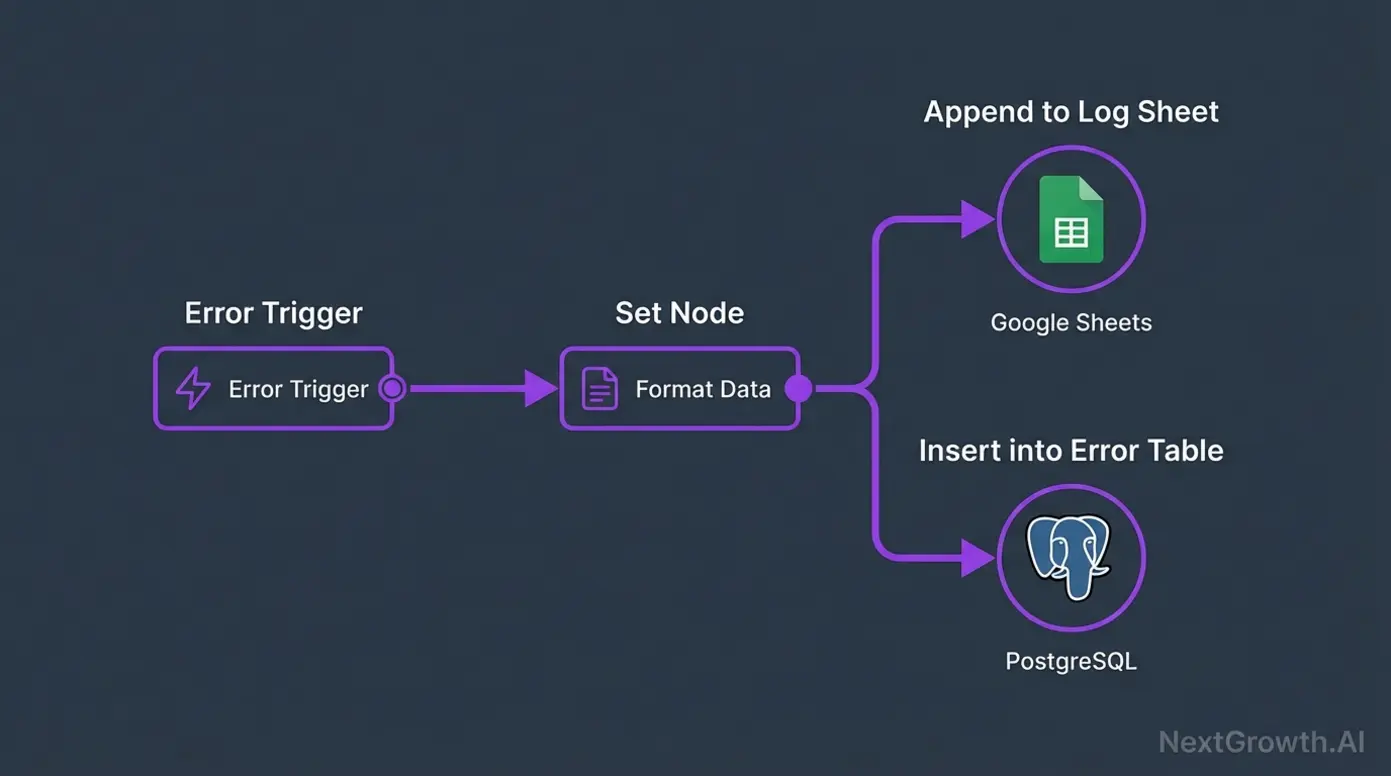

Centralized Logging: Integrate Google Sheets and PostgreSQL

Centralized logging is critical for maintaining visibility into failures beyond alert notifications. Instead of relying on scattered emails or messages, structured error logs stored in Google Sheets or PostgreSQL databases provide audit trails and enable advanced querying.

Structured error logs often include:

- Workflow and node names

- Timestamps

- Detailed error messages

- Execution data snapshots

This approach helps diagnose systemic issues and supports historical analysis.

You can add these sinks to your Global Error Workflow by placing a Google Sheets or Postgres node after the Error Trigger to insert each log entry. JSON templates for these insertions facilitate rapid deployment.

Using structured logging aligns with the Mission Control philosophy by centralizing error awareness for proactive automation maintenance.
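To make the idea of a structured error log concrete, here is a minimal sketch of a table covering the fields listed above, plus one query over it. It uses Python's built-in SQLite as a stand-in so it runs without a database server; in n8n the insert would be done by a database node against PostgreSQL. The table name, column names, and sample values are all hypothetical.

```python
import json
import sqlite3

# Hypothetical table layout covering the fields listed above.
SCHEMA = """
CREATE TABLE IF NOT EXISTS workflow_errors (
    id INTEGER PRIMARY KEY,
    occurred_at TEXT NOT NULL,
    workflow_name TEXT NOT NULL,
    node_name TEXT,
    error_message TEXT NOT NULL,
    execution_snapshot TEXT
)
"""


def log_error(conn, occurred_at, workflow_name, node_name, message, snapshot):
    """Insert one structured error row; the snapshot is stored as JSON text."""
    with conn:  # commits on success, rolls back if the insert raises
        conn.execute(
            "INSERT INTO workflow_errors "
            "(occurred_at, workflow_name, node_name, error_message, execution_snapshot) "
            "VALUES (?, ?, ?, ?, ?)",
            (occurred_at, workflow_name, node_name, message, json.dumps(snapshot)),
        )


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
log_error(conn, "2026-01-06T09:00:00Z", "Sync Orders", "HTTP Request",
          "Request timed out", {"order_id": 42})

# Example query: error counts per workflow, useful for spotting systemic issues.
counts = conn.execute(
    "SELECT workflow_name, COUNT(*) FROM workflow_errors GROUP BY workflow_name"
).fetchall()
```

Grouping by workflow or node name is the kind of question that is awkward to answer from a stream of alert emails but trivial once errors live in a table.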

Building Smart Retry Loops with Exponential Backoff Patterns

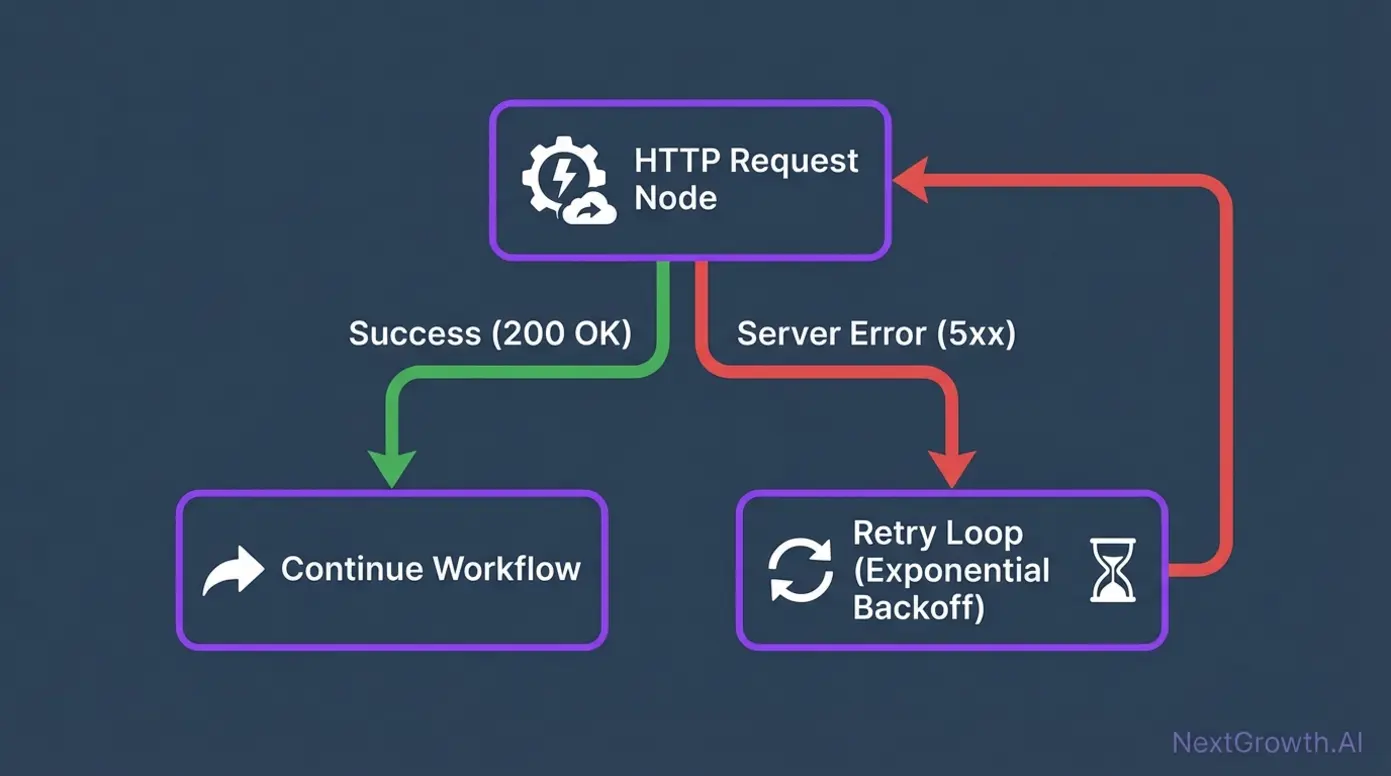

Retry logic with exponential backoff helps handle transient failures gracefully, such as temporary API downtime or rate limiting. Instead of retrying immediately at fixed intervals, exponential backoff progressively increases the wait time between retries.

A typical exponential backoff implementation uses a short JavaScript snippet that doubles the delay on each retry attempt, producing waits of 1 s, 2 s, 4 s, 8 s, and so on.
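The doubling rule can be written as a one-line formula. The sketch below shows it in Python for illustration; the same arithmetic ports directly to JavaScript. The function name, the 1-second base, and the 60-second cap are assumptions added for this example; the cap is there only so the delay cannot grow without bound.

```python
def backoff_delay(attempt: int, base_seconds: float = 1.0, cap_seconds: float = 60.0) -> float:
    """Delay before retry number `attempt` (0-based): base * 2**attempt, capped."""
    if attempt < 0:
        raise ValueError("attempt must be non-negative")
    return min(cap_seconds, base_seconds * (2 ** attempt))


delays = [backoff_delay(n) for n in range(4)]  # 1.0, 2.0, 4.0, 8.0
```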

In n8n, you embed this logic into retry loops by leveraging:

- A counter variable tracking attempts

- A Wait node configured with dynamically calculated delays

- Conditional routing to continue retrying or to escalate after maximum attempts

This pattern reduces the risk of overwhelming external services and improves workflow stability.
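The three building blocks above (attempt counter, computed wait, conditional routing) map onto a small loop. This is a sketch of the control flow in plain Python rather than an n8n workflow; `run_with_retries`, `MaxAttemptsExceeded`, and the default of four attempts are hypothetical choices for illustration. The `sleep` parameter is injectable so the loop can be exercised without real waiting.

```python
import time


class MaxAttemptsExceeded(Exception):
    """Raised when every attempt failed and the error should be escalated."""


def run_with_retries(operation, max_attempts=4, base_seconds=1.0, sleep=time.sleep):
    """Call `operation` until it succeeds or `max_attempts` is reached."""
    attempt = 0  # counter tracking attempts
    while True:
        try:
            return operation()
        except Exception as exc:
            attempt += 1
            if attempt >= max_attempts:
                # Escalation branch: hand the failure to alerting or the error workflow.
                raise MaxAttemptsExceeded(f"gave up after {attempt} attempts") from exc
            # Wait step with a dynamically calculated delay: 1s, 2s, 4s, ...
            sleep(base_seconds * 2 ** (attempt - 1))


# Usage: an operation that fails twice, then succeeds.
calls = {"count": 0}
waits = []


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary outage")
    return "ok"


result = run_with_retries(flaky, sleep=waits.append)
```

Here `waits` records the delays instead of sleeping, which makes the schedule easy to inspect. In a workflow, the equivalent of the `raise` is the branch that escalates after the maximum number of attempts.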

Integrating External Observability Tools: Sentry, Datadog, Grafana

External observability tools like Sentry, Datadog, and Grafana augment your n8n error handling ecosystem by providing real-time dashboards, alerting, and historical performance data.

You can send enriched error payloads from n8n using HTTP Request nodes configured in your Global Error Workflow. The payload typically contains detailed error context, workflow identifiers, and metadata.

Integrating these platforms enables:

- Faster detection of anomalies

- Incident correlation across multiple systems

- Automated alert routing via Slack, email, or PagerDuty

This proactive monitoring is foundational for enterprise-grade automation reliability.
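For a sense of what an enriched error payload might look like, the sketch below builds a JSON POST request without sending it. Sentry, Datadog, and Grafana each have their own ingestion APIs and authentication schemes, so the endpoint URL, the bearer-token header, and every payload field here are placeholders rather than any vendor's actual format.

```python
import json
import urllib.request


def build_alert_request(endpoint: str, api_key: str, error: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST carrying enriched error context."""
    payload = {
        "source": "n8n",
        "severity": error.get("severity", "error"),
        "workflow_id": error.get("workflow_id"),
        "workflow_name": error.get("workflow_name"),
        "failed_node": error.get("failed_node"),
        "message": error.get("message"),
        "execution_url": error.get("execution_url"),
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


request = build_alert_request(
    "https://observability.example.com/ingest",  # placeholder URL, not a real service
    "REPLACE_ME",
    {"workflow_id": "1", "workflow_name": "Sync Orders",
     "failed_node": "HTTP Request", "message": "Request timed out"},
)
body = json.loads(request.data)
# Sending would be: urllib.request.urlopen(request, timeout=10)
```

Including the workflow identifier and an execution link in every payload is what lets the receiving tool correlate incidents and lets a responder jump straight to the failed run.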

Web API & Network Integration Stability

Handling errors from various API protocols uniformly improves integration stability. Web API error handling in n8n covers REST, GraphQL, SOAP, and others with consistent patterns supporting retries and graceful degradation.

Standardizing Error Handling Across HTTP Client Nodes

HTTP client nodes in n8n like HTTP Request or GraphQL can fail due to network issues, API errors, or invalid requests. Standardizing error handling ensures these nodes react predictably.

Node-level settings control whether a node should:

- Retry on failure automatically

- Continue execution on error and trigger alternate branches

- Fail the workflow immediately

This flexibility enables handling different error severities appropriately.

Handling API Response Errors: Retries and Graceful Degradation

Errors from APIs, such as 4xx client errors or 5xx server errors, require different treatments:

- Retry transient 5xx errors with exponential backoff.

- Log and skip non-recoverable 4xx errors (such as 400 or 404) to prevent workflow crashes; treat 429 rate-limit responses as transient and retry them.

- Use fallback operations or default data to maintain flow continuity.

Standardized logic embedded in error-handling branches increases integration robustness.
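One way to keep this logic consistent across integrations is a single classification function that every error-handling branch shares. The sketch below is an assumption-laden example: the function names are hypothetical, and treating 408 and 429 as retryable alongside 5xx is a common convention rather than something every API guarantees.

```python
RETRYABLE_CLIENT_CODES = {408, 429}  # assumption: timeouts and rate limits are transient


def classify_status(status: int) -> str:
    """Map an HTTP status code to 'ok', 'retry', or 'skip'."""
    if 200 <= status < 300:
        return "ok"
    if status >= 500 or status in RETRYABLE_CLIENT_CODES:
        return "retry"
    return "skip"  # everything else (mainly 4xx): log it and move on


def resolve(status: int, body, default):
    """Return the response body on success, otherwise fall back to a default."""
    return body if classify_status(status) == "ok" else default


decisions = {code: classify_status(code) for code in (200, 404, 429, 503)}
fallback_items = resolve(404, None, default=[])
```

The `resolve` helper illustrates graceful degradation: downstream steps receive an empty list instead of a crash when the lookup fails.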

Node-Level Settings: Retry on Fail vs Continue on Error Behavior

Configuring node settings fine-tunes error tolerance:

- Retry on Fail: Automatically re-executes the node on transient failures up to a defined count.

- Continue on Error: Setting the node’s “On Error” option to “Continue (using error output)” adds a second output that carries failed items, so an alternative workflow path can handle conditional error recovery.

Combining these with global error workflows forms a layered defense against silent failures.
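For reference, these settings are also visible when you export a workflow as JSON. The fragment below, written as a Python dictionary and printed as JSON, shows how the relevant keys are assumed to appear on a node; verify the key names and allowed values by exporting a configured node from your own instance, since they can differ between n8n versions. The node name and the numeric values are made up.

```python
import json

# Settings portion of a node as it might appear in exported workflow JSON.
# Key names are assumptions to verify against your own export.
node_settings = {
    "name": "Fetch Orders",            # hypothetical node name
    "retryOnFail": True,               # Retry On Fail enabled
    "maxTries": 3,                     # total attempts, including the first
    "waitBetweenTries": 2000,          # milliseconds between attempts
    "onError": "continueErrorOutput",  # route failures to the error output
}

exported = json.dumps(node_settings, indent=2)
print(exported)
```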

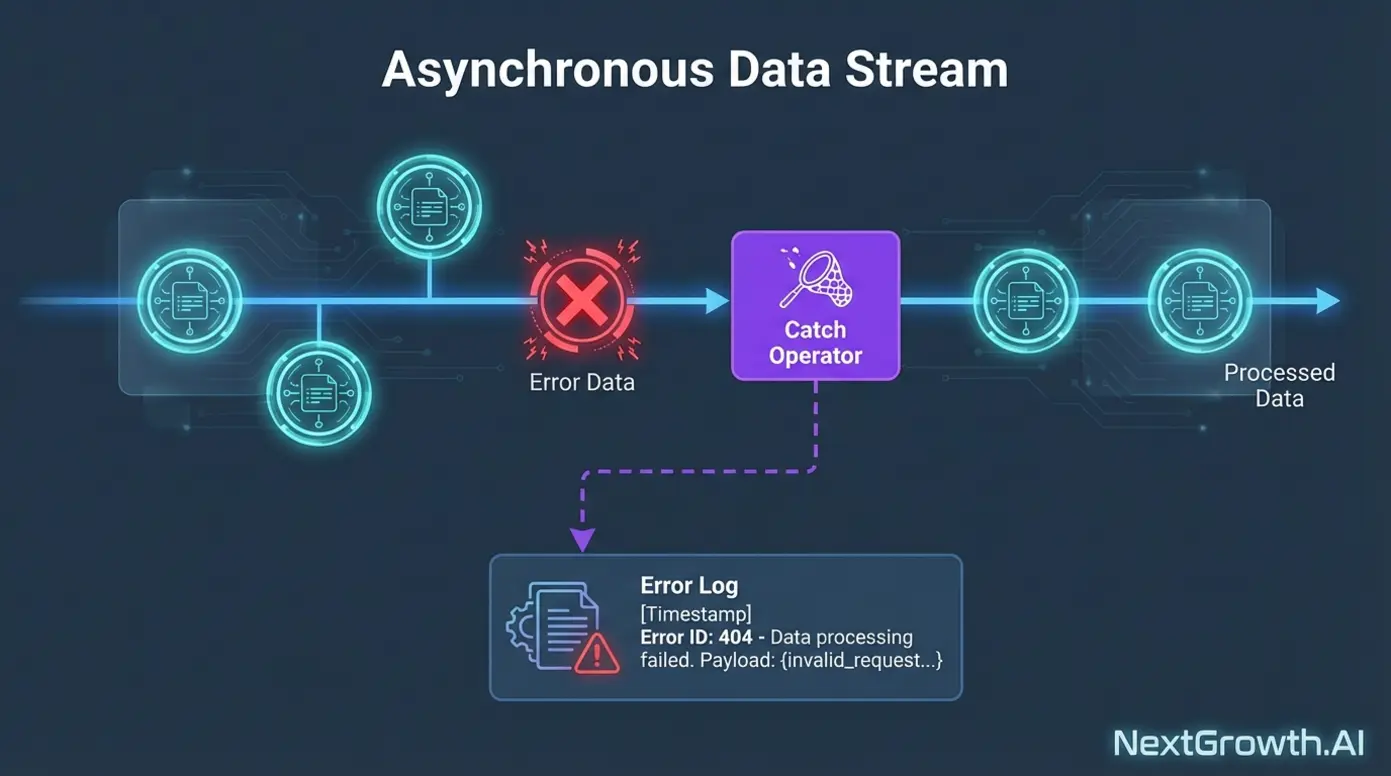

Reactive Programming & Frontend State Management

Errors in asynchronous UI streams and state management can cause frontend disruptions if not handled properly. Using reactive programming patterns within or alongside n8n can help maintain UI states despite failures.

Managing Errors in Asynchronous UI Streams and State Managers

Frameworks like MobX and RxJava use observable streams to manage UI state reactively. Errors propagated uncaught through these streams can break the UI or cause inconsistent states.

Implementing catch operators intercepts errors in the stream, allowing fallback states or recovery mechanisms without interrupting user interaction.
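The idea behind a catch operator can be shown without any reactive library. The sketch below uses a plain Python generator as a stand-in for an observable stream; it is not the RxJava or MobX API, and `catch_with_fallback`, `price_updates`, and the `-1` fallback value are hypothetical.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def catch_with_fallback(stream: Iterable[T], fallback: T) -> Iterator[T]:
    """Yield items from `stream`; on an error, emit `fallback` once and finish."""
    try:
        for item in stream:
            yield item
    except Exception:
        yield fallback


def price_updates():
    """Simulated stream that emits two values and then fails."""
    yield 101
    yield 102
    raise RuntimeError("feed disconnected")


ui_states = list(catch_with_fallback(price_updates(), fallback=-1))
```

The consumer sees a normal, finite sequence ending in a sentinel value it can render as "unavailable", rather than an exception that tears down the whole view.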

Catch Operators and State Boundary Protection Best Practices

Apply catch operators close to potential failure points to isolate issues. Use state boundary strategies to reset or compensate for unexpected errors, ensuring the UI remains responsive.

While not native to n8n, integrating frontend observability feedback loops complements backend error handling for holistic resilience.

System-Level Parsing & Data Integrity

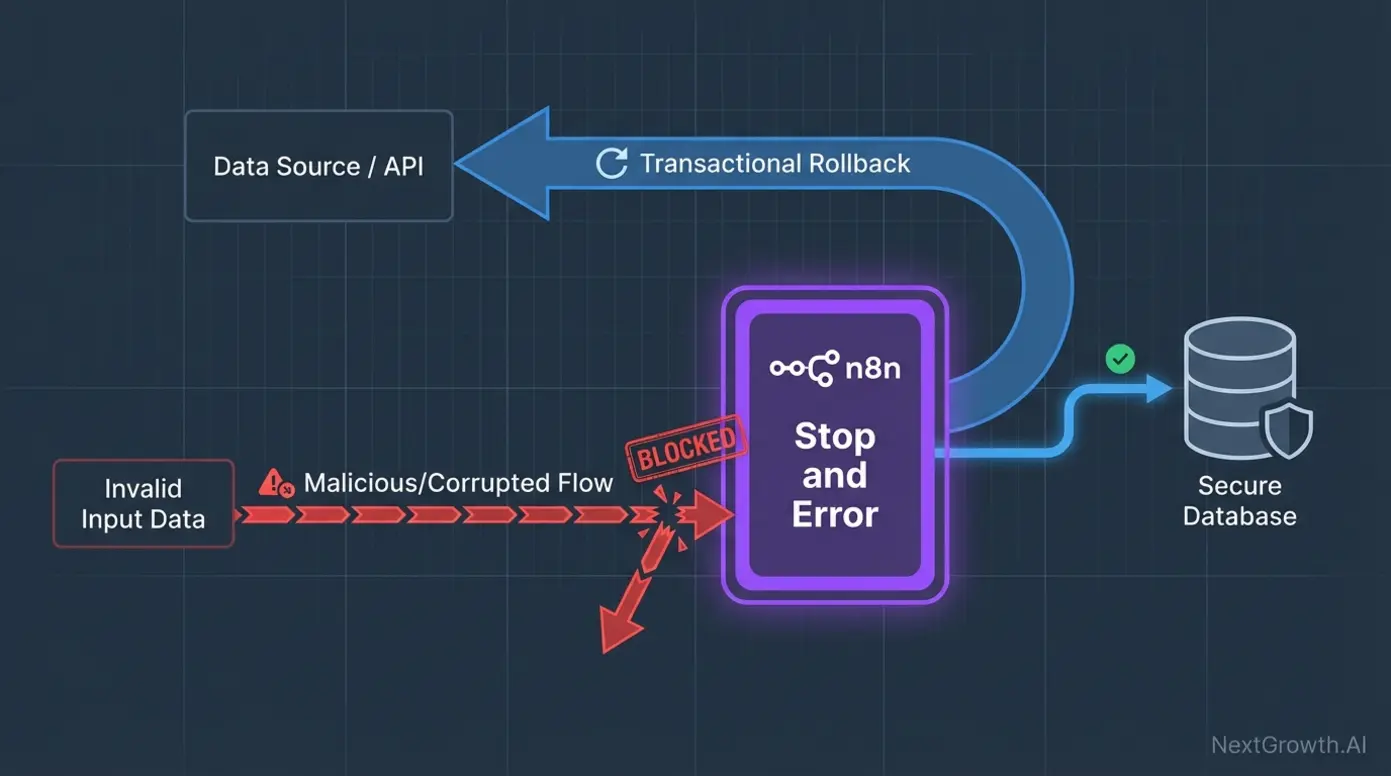

System crashes often stem from unvalidated inputs and data inconsistencies. Enforcing strict validation and transactional logic prevents destructive failures.

Best Practices for Input Validation and Type-Safe Parsing

Input validation through custom functions or nodes ensures workflows process expected data formats, reducing parsing errors. Type-safe parsing avoids system crashes caused by invalid types.
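As a small example of validation combined with type-safe parsing, the sketch below converts a raw record into a typed object and collects problems instead of raising on the first one. The `Order` fields and the validation rules are hypothetical; a real workflow would validate whatever shape its own inputs have.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    email: str
    amount: float


def parse_order(raw: dict):
    """Return (Order, []) when valid, or (None, [problems]) when not."""
    problems = []
    try:
        order_id = int(raw.get("order_id"))
    except (TypeError, ValueError):
        order_id = None
        problems.append("order_id must be an integer")
    email = raw.get("email")
    if not isinstance(email, str) or "@" not in email:
        problems.append("email must contain '@'")
    try:
        amount = float(raw.get("amount"))
    except (TypeError, ValueError):
        amount = None
        problems.append("amount must be a number")
    if problems:
        return None, problems
    return Order(order_id, email, amount), []


order, no_problems = parse_order({"order_id": "42", "email": "a@example.com", "amount": "19.99"})
rejected, problems = parse_order({"order_id": "abc", "amount": None})
```

Returning the full list of problems lets an error branch log everything wrong with a record in one pass, and downstream steps only ever receive values of the expected types.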

Transactional Rollbacks and Workflow Failures with Stop and Error Nodes

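Use the Stop and Error node in n8n to fail a workflow explicitly under critical conditions. The node throws an error, which marks the execution as failed and invokes the configured error workflow; that workflow can then run rollback or compensation logic in connected databases or services. This control maintains data integrity across systems.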
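The pairing of a deliberate failure with a rollback can be sketched outside n8n. In the example below a Python exception plays the role of the explicit stop, and an in-memory SQLite transaction stands in for the connected database; the table names, the `reserve_stock` function, and the stock rule are hypothetical.

```python
import sqlite3


class CriticalWorkflowError(Exception):
    """Plays the role of a deliberate 'stop and error' condition."""


def reserve_stock(conn, item: str, quantity: int) -> None:
    """Record a reservation, then fail on purpose if stock would go negative."""
    with conn:  # one transaction: commit on success, roll back on any exception
        conn.execute("INSERT INTO reservations (item, quantity) VALUES (?, ?)", (item, quantity))
        conn.execute("UPDATE stock SET quantity = quantity - ? WHERE item = ?", (quantity, item))
        (remaining,) = conn.execute(
            "SELECT quantity FROM stock WHERE item = ?", (item,)
        ).fetchone()
        if remaining < 0:
            raise CriticalWorkflowError(f"insufficient stock for {item}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stock (item TEXT PRIMARY KEY, quantity INTEGER)")
conn.execute("CREATE TABLE reservations (item TEXT, quantity INTEGER)")
conn.execute("INSERT INTO stock VALUES ('widget', 5)")
conn.commit()

failure = None
try:
    reserve_stock(conn, "widget", 8)
except CriticalWorkflowError as exc:
    failure = str(exc)

reservation_count = conn.execute("SELECT COUNT(*) FROM reservations").fetchone()[0]
stock_left = conn.execute("SELECT quantity FROM stock WHERE item = 'widget'").fetchone()[0]
```

Because both writes happen inside one transaction, the explicit failure leaves neither a dangling reservation nor a negative stock count. When the writes span several systems that cannot share a transaction, the error workflow has to perform the compensating steps itself.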

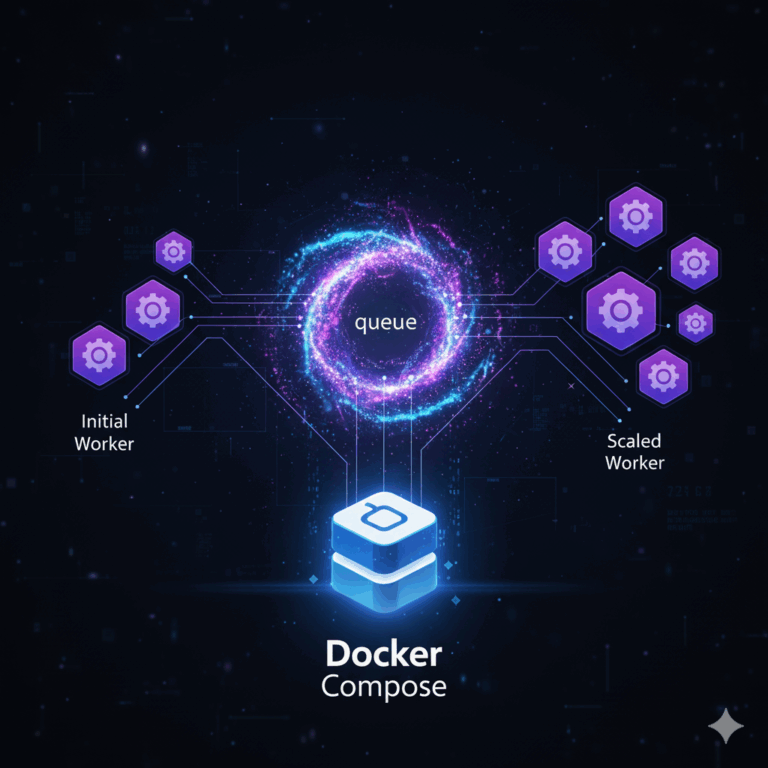

Infrastructure Stability: Preventing Redis Connection Failures and OOM Crashes

Scaling n8n includes managing Redis connections and memory usage to avoid out-of-memory (OOM) crashes. Proper queue management and monitoring prevent infrastructure-level instability.

Smart Limitations & Alternatives for n8n Error Handling

While n8n provides powerful error handling mechanisms, some limitations exist. For example, UI changes across versions can impact Error Workflow dropdown settings, requiring careful updates. Large complex error workflows may become difficult to maintain, increasing cognitive load.

In scenarios demanding high throughput or enterprise scaling, custom development or integrating external monitoring tools might be necessary. Persistent unrecoverable errors or API quota limits could necessitate professional consultation or alternative solutions.

If your automation workflows reach these complexities, consider:

- Consulting workflow automation experts.

- Integrating specialized external systems.

- Using cloud-native event brokers for massive scale.

Acknowledging these limitations empowers you to build robust automation while knowing when to seek advanced support.

Frequently Asked Questions

How to do error handling in n8n?

n8n error handling is done through node-level settings like ‘Retry on Fail’ and global workflows built on the Error Trigger node. These approaches enable capturing failures from any linked workflow and building custom recovery logic. Create a global error workflow that centralizes error processing and notification. Settings may vary based on workflow complexity and n8n version.

What can be the reasons for node errors in n8n?

Common causes for n8n node errors include invalid input data, expired API credentials, rate limits, and network timeouts. Understanding these helps configure retries and fallback logic effectively. An API token expiration often causes immediate workflow failures requiring reauthentication. Specific reasons depend on nodes and integrations used.

What are best practices for error handling?

Best practices include centralized error workflows, writing errors to persistent logs, and using node retry settings for transient failures. This approach ensures visibility and automated recovery where possible. Use Google Sheets or PostgreSQL for error log databases instead of only notifications. Tailor practices to your environment and error patterns.

How do you perform error handling?

Perform global error handling in n8n by setting up a dedicated workflow with an Error Trigger node and linking it in each workflow’s settings. This ensures automatic invocation of your error workflow on failures. Configure the ‘Error Workflow’ dropdown in your production workflow. This requires an n8n version that supports error workflows.

How to handle errors in a node?

At the individual node level in n8n, you can set the On Error option to ‘Continue (using error output)’ under the node’s Settings to add an error output stream. This helps build custom logic that reacts directly to node failures. Use this output to trigger alternative workflow branches. Use it with care to avoid masking issues.

Conclusion

Effective n8n error handling is essential to build resilient and reliable automation workflows that minimize silent failures and reduce manual intervention. Combining node-level retries, global error workflows, and advanced patterns like circuit breakers and dead letter queues equips you to maintain production-grade stability. Integrating external monitoring platforms enhances visibility and accelerates incident response. Begin implementing the Mission Control architecture today using the provided JSON templates and practical strategies to make your automation more reliable.