Can SEO Be Automated? The 30/70 Hybrid Guide (2026)


Can SEO be automated? Yes — but not entirely. The question isn’t whether automation works; it’s which tasks you can safely offload without sacrificing quality or triggering Google’s 2026 spam policies.

Right now, you’re likely spending 20+ hours a week on repetitive SEO tasks: keyword research, technical audits, rank tracking, reporting. Meanwhile, “set-and-forget” automation promises flood your inbox, but you’ve heard the horror stories — sites penalized for scaled content abuse, rankings tanked by thin AI-generated pages.

By the end of this guide, you’ll have a clear decision framework — the 30/70 Hybrid Architecture — that identifies exactly which SEO tasks to automate (30%), which to augment with AI assistance, and which to keep human-led (70%), so you reclaim your time without risking algorithmic penalties. Valid as of Q1 2026, this framework reflects current Google policies and industry consensus on safe automation practices.

We’ll cover feasibility (what can and can’t be automated), the limits and risks (avoiding penalties), and strategic implementation (the 80/20 rule, agentic workflows, and real-world examples).

Key Takeaways

SEO automation follows a 30/70 rule: 30% of tasks (reporting, technical audits, schema markup) can be fully automated with AI agents, while 70% (strategy, content polish, relationship-building) require human expertise. According to McKinsey’s 2024 report on AI adoption, 65% of organizations now use generative AI in at least one business function, a sign that hybrid human-AI workflows are becoming the new standard.

- Fully automatable: Rank tracking, broken link monitoring, schema generation, performance reporting

- Agent-assisted: Content outlining, keyword clustering, internal linking suggestions

- Human-only: Strategic planning, brand voice, digital PR, final content review

- Risk mitigation: Google’s 2024 scaled content abuse policy targets bulk AI publishing — use validation loops

SEO Automation Feasibility & Core Concepts

SEO can be automated, but only in part: approximately 30% of tasks, such as reporting, technical audits, and schema markup, are fully automatable with AI tools and agents. The remaining 70% of SEO work (strategy, content quality control, and relationship-building) still requires human expertise and judgment. Adoption of the underlying technology is already widespread: 65% of organizations now regularly use generative AI in at least one business function (McKinsey, 2024). This 30/70 hybrid model is the new standard for efficient SEO workflows in 2026.

The distinction between automatable and human-required tasks isn’t arbitrary. SEO automation, the use of software and AI to handle repetitive search engine optimization tasks, excels at data processing and pattern recognition. These systems can monitor thousands of ranking positions, crawl entire websites for technical errors, and generate structured data markup in seconds — tasks that would take humans days or weeks to complete manually.

However, automation fundamentally lacks the contextual judgment required for strategic decisions. AI agents, autonomous software systems that execute tasks with minimal human intervention, cannot understand your brand’s unique positioning in the market, identify content opportunities that align with business goals, or build authentic relationships with industry influencers. These capabilities require human empathy, intuition, and lived experience.

“Yeah, I’m seeing so much automation in SEO these days. Like, people are automating everything from planning content to link building”

This observation from professional communities reflects the automation trend, but it also highlights a critical misconception: just because something can be automated doesn’t mean it should be. Google’s scaled content abuse policy, introduced in 2024, specifically targets bulk low-quality content generation, making human oversight more critical than ever.

The 30% automatable category includes specific, well-defined tasks. Rank tracking tools like Semrush Position Tracking and SE Ranking monitor keyword positions across search engines without human intervention. Broken link monitoring through Screaming Frog and Ahrefs Site Audit identifies 404 errors and redirect chains automatically. Schema markup generators create structured data code based on page content. Technical crawl tools like Sitebulb and Lumar (formerly DeepCrawl) analyze site architecture and identify issues. Performance reporting dashboards built with Looker Studio (formerly Google Data Studio) and Supermetrics compile metrics into visual reports.
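To show what a schema markup generator produces, here is a minimal Python sketch that builds a schema.org `Article` snippet in JSON-LD from a few page fields. The function name and its inputs are hypothetical. A real generator would extract these values from the page itself and would support many more types and properties.

```python
import json


def article_jsonld(headline: str, author_name: str, date_published: str, url: str) -> str:
    """Build a minimal schema.org Article snippet in JSON-LD (illustrative only)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2026-01-15"
        "mainEntityOfPage": url,          # canonical URL of the page
    }
    # JSON-LD is embedded in the page inside a script tag of this type.
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    print(article_jsonld(
        "Can SEO Be Automated?",
        "Jane Doe",                              # hypothetical author
        "2026-01-15",
        "https://example.com/seo-automation",    # hypothetical URL
    ))
```

Validate the output with a structured data testing tool before deploying it. Generation is the automatable step, and checking that the markup matches visible page content is still worth doing.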

Meanwhile, the 70% human-required category encompasses tasks demanding strategic thinking and qualitative judgment. Strategic planning involves deciding which keywords to target based on business objectives, competitive landscape, and resource constraints. Content ideation requires understanding brand voice, audience psychology, and market differentiation. Digital PR and outreach depend on relationship-building, personalized communication, and authentic engagement. Final content review ensures that all published material maintains E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), Google’s quality framework that favors content demonstrating first-hand knowledge.

The evolution from simple automation tools to intelligent agents marks a significant shift in SEO workflows. Traditional automation executes pre-defined rules — scheduled reports, API-driven data pulls, scripted actions. Intelligent agentic systems use AI to make decisions, validate outputs, and adapt workflows based on changing conditions. This distinction becomes critical when implementing automation strategies that balance efficiency with quality control.

But before you automate everything in the ‘30%’ bucket, you need to understand the limits and risks that can turn time-saving tools into ranking disasters.

Yes, But With Caveats: The 30/70 Rule

Can SEO be automated? Yes, but only partially. The 30/70 split quantifies exactly which tasks belong in each category based on task complexity and Google’s quality requirements.

The 30% automatable category consists of data-heavy, rule-based tasks that follow consistent patterns. Rank tracking through tools like Semrush Position Tracking and SE Ranking monitors keyword positions daily without human input. Broken link monitoring via Screaming Frog and Ahrefs Site Audit identifies 404 errors, redirect chains, and orphaned pages automatically. Schema markup generators such as Merkle’s Schema Markup Generator create Schema.org structured data code from page content. Technical crawls through Sitebulb and Lumar (formerly DeepCrawl) analyze site architecture, identify indexing issues, and flag Core Web Vitals problems. Performance reporting dashboards built with Looker Studio (formerly Google Data Studio) and Supermetrics compile metrics into visual reports delivered on schedule.

These tasks share common characteristics: they process large data volumes, follow established rules, require minimal contextual judgment, and produce objective, measurable outputs. Automation excels precisely because these tasks don’t require understanding business strategy, brand positioning, or user psychology.
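Redirect-chain detection is a good example of the rule-based checks described here. The sketch below flags chains (two or more hops) and loops from a mapping of source URL to redirect target. The input format is an assumption, roughly what a crawler export could be reshaped into, and does not match any specific tool’s output.

```python
def find_redirect_problems(redirects: dict[str, str]) -> dict[str, list[str]]:
    """Flag redirect chains (2+ hops) and redirect loops.

    `redirects` maps each redirecting URL to the URL it points to
    (a hypothetical, pre-processed crawl export).
    """
    problems: dict[str, list[str]] = {"chains": [], "loops": []}
    for start in redirects:
        path = [start]
        current = start
        while current in redirects:
            current = redirects[current]
            if current in path:           # revisited a URL: this is a loop
                problems["loops"].append(start)
                break
            path.append(current)
        else:
            # No loop: path holds the start URL plus every hop taken.
            if len(path) > 2:             # more than one hop to the final URL
                problems["chains"].append(start)
    return problems


if __name__ == "__main__":
    crawl = {"/a": "/b", "/b": "/c", "/x": "/y", "/p": "/q", "/q": "/p"}
    print(find_redirect_problems(crawl))
```

Here `/a` is reported as a chain because it takes two hops to reach `/c`. `/p` and `/q` are reported as loops. Single-hop redirects such as `/x` pass.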

The 70% human-required category encompasses tasks demanding strategic thinking, empathy, and brand-specific knowledge. Strategic planning involves deciding which keywords to pursue based on business goals, competitive analysis, and resource availability — decisions that require understanding market dynamics AI cannot comprehend. Content ideation demands knowledge of brand voice, audience pain points, and differentiation strategies that emerge from lived experience. Digital PR and relationship-building depend on authentic communication, trust development, and personalized outreach that automated systems cannot replicate convincingly. Final content review ensures E-E-A-T signals are present, brand guidelines are maintained, and quality standards are met before publication.

According to the Semrush automation guide, automation tools handle data-heavy tasks like crawling and reporting, freeing strategists for higher-value work. This division isn’t just theoretical — it reflects fundamental limitations in what algorithms can and cannot do effectively.

The 30/70 split exists because automation excels at pattern recognition but lacks human judgment for nuance, empathy, and brand alignment. Google’s E-E-A-T guidelines explicitly require demonstrable human expertise, particularly for content where accuracy and trust matter. Automated systems can identify patterns in top-ranking content, but they cannot inject the authentic perspective, first-hand experience, and authoritative voice that Google’s algorithms increasingly reward.

Consider a real-world scenario: A marketing team uses automated tools to generate daily rank reports and weekly technical health scores, consuming roughly 5-10 hours of automation runtime but requiring only 30 minutes of human review time. This frees the SEO strategist to spend 20+ additional hours weekly on content gap analysis, competitive positioning research, and high-value content creation that directly impacts revenue and brand differentiation.

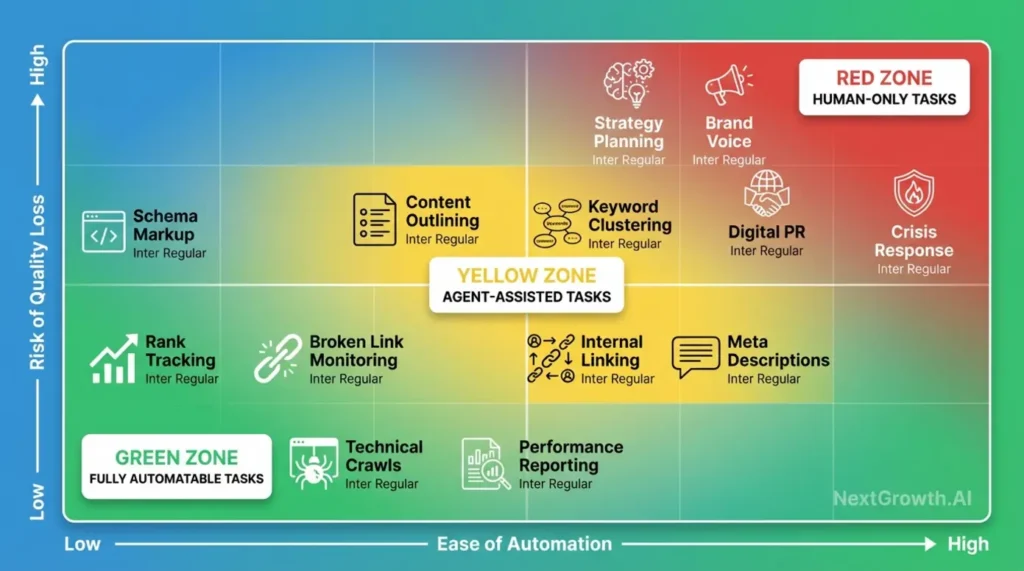

As the Traffic Light Automation Matrix illustrates, tasks in the Green zone (fully automatable) cluster in the lower-left quadrant: high ease of automation, low risk of quality loss. Yellow zone tasks (agent-assisted) require human validation before implementation. Red zone tasks (human-only) demand full human control to maintain quality and strategic alignment.
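The matrix can be expressed as a simple classification rule. The sketch below assumes each task has been scored from 0 to 1 on ease of automation and on risk of quality loss. The scores and cutoffs are illustrative assumptions and are not values taken from the matrix.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    ease: float  # 0-1: how easy the task is to automate (hypothetical score)
    risk: float  # 0-1: risk of quality loss if automated (hypothetical score)


def traffic_light(task: Task, min_ease: float = 0.7,
                  max_green_risk: float = 0.3, min_red_risk: float = 0.7) -> str:
    """Place a task in the Green/Yellow/Red zone; all cutoffs are assumptions."""
    if task.risk >= min_red_risk:
        return "Red"      # human-only: high risk wins even if the task is easy
    if task.ease >= min_ease and task.risk <= max_green_risk:
        return "Green"    # fully automatable
    return "Yellow"       # agent-assisted: a human validates before use


if __name__ == "__main__":
    for t in [Task("Rank tracking", 0.9, 0.1),
              Task("Keyword clustering", 0.8, 0.5),
              Task("Digital PR", 0.2, 0.9)]:
        print(t.name, "->", traffic_light(t))
```

Risk is checked first, so a task that is easy to automate but risky still lands in Red.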

Understanding this framework helps you make informed decisions about which automated keyword research workflows to implement versus which tasks to keep under direct human control.

But what exactly do we mean by ‘automation’? The term covers everything from scheduled reports to intelligent AI agents that make autonomous decisions.

What ‘SEO Automation’ Actually Means (Tools vs. Agents)

SEO automation exists on a spectrum from simple scheduled tasks to sophisticated intelligent systems. Understanding this range helps you select appropriate solutions for different workflow components.

Traditional Automation Tools execute pre-defined rules and scheduled tasks without decision-making capability. Zapier workflows pull Google Search Console data into Google Sheets every Monday morning. Python scripts check for 404 errors weekly and email reports to the development team. IFTTT rules trigger Slack alerts when keyword rankings drop by five positions or more. These tools follow “IF-THEN” logic: IF a condition occurs, THEN execute a specific action.

Traditional tools excel at consistency and reliability. They perform the same task repeatedly without variation, reducing human error and freeing time for strategic work. However, they lack adaptability — if conditions change or new scenarios arise, humans must manually update the automation rules.
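The IF-THEN logic of a traditional tool fits in a few lines. The hypothetical function below compares two rank snapshots and returns alert messages when a keyword drops five or more positions or disappears from the tracked results. Sending those messages to Slack, for example through a webhook, Zapier, or IFTTT, is not shown.

```python
def rank_drop_alerts(previous: dict[str, int], current: dict[str, int],
                     threshold: int = 5) -> list[str]:
    """IF a keyword worsens by `threshold`+ positions, THEN emit an alert.

    Positions are SERP ranks, so a larger number means a worse ranking.
    """
    alerts = []
    for keyword, old_pos in previous.items():
        new_pos = current.get(keyword)
        if new_pos is None:
            alerts.append(f"{keyword}: no longer in tracked results (was #{old_pos})")
        elif new_pos - old_pos >= threshold:
            alerts.append(f"{keyword}: dropped from #{old_pos} to #{new_pos}")
    return alerts


if __name__ == "__main__":
    last_week = {"seo automation": 3, "rank tracker": 8, "schema": 4}
    this_week = {"seo automation": 9, "rank tracker": 10}
    for message in rank_drop_alerts(last_week, this_week):
        print(message)
```

The rule is fixed. If the team later wants to alert on a three-position drop for priority keywords only, a human has to edit the code. This is the adaptability limit described above.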

Agentic AI Systems represent a qualitative leap in automation capability. These autonomous software systems make decisions, validate outputs, and adapt workflows based on changing conditions. An agentic system might generate a content brief, then use a second AI model to audit that brief for keyword stuffing, E-E-A-T compliance, and readability issues before sending it to a writer for final review.
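One check a validation agent might run on a draft brief is keyword density. The sketch below is a deterministic stand-in for that audit step, not an AI model. The 3% ceiling is an arbitrary assumption, because Google publishes no keyword density threshold.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Share of words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide over the text and count exact matches of the phrase's word sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


def flags_keyword_stuffing(text: str, phrase: str, max_density: float = 0.03) -> bool:
    """True when density exceeds a hypothetical 3% ceiling."""
    return keyword_density(text, phrase) > max_density


if __name__ == "__main__":
    draft = "SEO automation tips. SEO automation works. SEO automation rocks."
    print(keyword_density(draft, "seo automation"),
          flags_keyword_stuffing(draft, "seo automation"))
```

In an agentic setup this would be one of several checks feeding the reviewer, alongside the model-based E-E-A-T and readability audit.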

The key difference is decision-making autonomy. Traditional tools execute pre-defined rules without judgment. Agents use intelligence to analyze situations and adapt responses. When rankings drop, a traditional tool sends an alert. An agentic system analyzes why rankings dropped, cross-references competitor content changes, identifies content gaps, and suggests specific content updates or technical fixes — all before human review.

According to IBM’s intelligent automation definition, intelligent automation differs from basic robotic process automation (RPA) by integrating AI and machine learning to handle complex decision-making (IBM, 2024). This distinction matters because agentic systems can handle ambiguous scenarios that rule-based tools cannot address.

Consider a practical scenario: A traditional tool schedules a weekly technical audit report that lists all 404 errors, slow-loading pages, and missing meta descriptions. An agentic system reviews that same report, identifies high-priority issues based on traffic impact and business value, generates specific fix recommendations with implementation steps, drafts Jira tickets for the development team with appropriate priority labels, and flags issues requiring strategic decisions for human review. The agent handles execution; humans focus on strategic approval.
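The triage step in this scenario can be approximated with a deterministic sketch. It scores each audit issue by traffic times business value, turns the actionable issues into draft ticket titles, and sets aside anything that needs a strategic decision. The `AuditIssue` fields, the 1-3 value scale, and the priority cutoffs are hypothetical. A real agent would add an LLM and the Jira API, and neither is shown here.

```python
from dataclasses import dataclass


@dataclass
class AuditIssue:
    url: str
    kind: str                      # e.g. "404", "slow page"
    monthly_visits: int            # traffic to the affected URL
    business_value: int            # 1 (low) to 3 (high), set by the team
    needs_strategy: bool = False   # e.g. whether to delete or merge a page


def triage(issues: list[AuditIssue]) -> tuple[list[str], list[str]]:
    """Return (draft ticket titles by priority, items for human review)."""
    human_review = [f"{i.kind} on {i.url}" for i in issues if i.needs_strategy]
    actionable = [i for i in issues if not i.needs_strategy]
    # Highest traffic x business value first.
    actionable.sort(key=lambda i: i.monthly_visits * i.business_value, reverse=True)
    tickets = []
    for i in actionable:
        score = i.monthly_visits * i.business_value
        priority = "High" if score >= 1000 else "Medium" if score >= 100 else "Low"
        tickets.append(f"[{priority}] Fix {i.kind} on {i.url}")
    return tickets, human_review


if __name__ == "__main__":
    tickets, review = triage([
        AuditIssue("/pricing", "404", 800, 3),
        AuditIssue("/blog/old", "missing meta description", 50, 1),
        AuditIssue("/guide", "slow page", 300, 2),
        AuditIssue("/legacy", "thin content", 20, 1, needs_strategy=True),
    ])
    print(tickets, review, sep="\n")
```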

This evolution enables more sophisticated workflows, but also introduces new challenges. Agentic systems require training data, validation mechanisms, and quality control processes that simple tools don’t need. Understanding when to use SEO APIs for programmatic automation versus when to implement full agentic workflows depends on your team’s technical capability and automation maturity.

A common misconception is that “automation equals AI writing entire articles.” This interpretation misunderstands safe automation in 2026. Google’s scaled content abuse policy doesn’t ban AI-generated content — it penalizes bulk low-quality content produced primarily to manipulate rankings without providing genuine value. Automation should support quality content creation, not replace human expertise and editorial judgment entirely.

So if tools and agents can handle 30% of SEO work, what about the future? Will human SEOs still have jobs in 5 years?

The Future Outlook: Will SEO Exist in 5 Years?

Will SEO be automated completely, rendering human expertise obsolete? The data tells a nuanced story. Standard search volume for “can seo be automated” shows an 80% year-over-year decline, suggesting the novelty of the question is fading. However, AI-predicted search volume for automation-related queries is growing 64% year-over-year. This apparent contradiction suggests that the phrasing is maturing while interest in automation solutions continues to grow.

Is SEO dying due to AI? No — SEO is evolving, not dying. The profession is shifting from manual execution to strategic oversight. Human SEOs are becoming “automation architects” who design workflows, establish quality standards, and audit AI outputs rather than manually executing every task.

This transformation mirrors historical shifts in other technical fields. Database administrators once wrote SQL queries manually; now they design schemas and optimize query performance while automation handles routine operations. Similarly, SEO professionals are moving up the value chain from task execution to strategic design and quality assurance.

Google’s spam policies define ‘scaled content abuse’ as generating many pages primarily to manipulate search rankings rather than to help users, regardless of whether the content is produced through automation, by humans, or by a combination of both. The policy was announced alongside the March 2024 core update (Google Search Central, 2024). Notably, it doesn’t ban AI assistance in content creation; it penalizes low-quality scaled content regardless of how it’s produced. This distinction is critical: automation is viable IF combined with human validation loops and quality control mechanisms.

Forward-looking prediction: By 2027, top-performing SEO teams will operate as three-part hybrid units made up of one strategist who sets direction and makes high-level decisions, one automation engineer who builds and maintains AI workflows and integration systems, and a suite of AI agents handling 80% of execution tasks, including monitoring, reporting, analysis, and initial content drafting.

This model already exists in progressive marketing organizations. Reports from professional communities point the same way, with teams describing weekly time savings of 20-30 hours while maintaining or improving output quality.

The evolution isn’t uniform across all SEO disciplines. Technical SEO is furthest along the automation curve, with sophisticated tools handling site crawls, schema generation, and performance monitoring. Content SEO remains more human-intensive, though AI assistance in outlining, research, and drafting is accelerating. Link building and digital PR remain heavily relationship-based, resisting full automation.

The critical skill for SEO professionals in 2026 and beyond isn’t avoiding automation — it’s knowing how to direct it effectively. Understanding which tasks to automate, which to augment with AI, and which to keep fully human becomes the core competency separating high-performing teams from those struggling with quality control and penalty risks.

Understanding the feasibility is step one. Step two is knowing the limits — where automation fails and what risks you face if you ignore them.

The Limits of Automation: Human vs. AI

SEO tasks requiring empathy, brand-specific judgment, or relationship-building cannot be fully automated without quality loss and penalty risk. Research from BCG’s AI adoption challenges report shows 74% of companies struggle to achieve scalable value from AI initiatives (BCG, 2024), a finding consistent with the view that automation without human oversight often fails. This section identifies the hard limits: where automation breaks down and what safeguards prevent penalties.

The gap between automation hype and automation reality stems from fundamental limitations in what algorithms can accomplish. While AI excels at pattern recognition and data processing, it lacks the contextual awareness, ethical judgment, and authentic relationship-building capability that certain SEO tasks require.

Human-Only Tasks represent the core strategic and relationship-based work that defines competitive differentiation. These five categories cannot be safely automated without risking quality degradation or brand damage:

Strategy Formulation requires understanding business objectives, competitive dynamics, and market positioning that AI cannot comprehend. Deciding which market segment to target with content involves analyzing profit margins, customer lifetime value, competitive intensity, and internal resource constraints. Planning a content calendar around product launches or seasonal trends demands knowledge of sales cycles, inventory availability, and promotional strategies. Prioritizing keyword opportunities based on business goals rather than pure search volume requires judgment about which rankings drive revenue versus vanity metrics.

Empathy-Driven Content demands lived experience and authentic perspective. Google’s E-E-A-T guidelines specifically prioritize first-hand experience and demonstrable expertise. Content like “What It’s Like to Manage a Remote Team” requires genuine experience managing distributed teams, not just research about best practices. Understanding emotional triggers for different audience segments emerges from human psychology and market immersion, not algorithmic analysis. Crafting brand narratives that resonate requires cultural awareness and storytelling skill that AI cannot replicate convincingly.

Relationship-Based Digital PR depends on trust, authenticity, and reciprocity. Personalized outreach to journalists requires understanding their beat, recent coverage, and content preferences — then crafting pitches that provide genuine value rather than generic templates. Building long-term partnerships with industry influencers involves consistent engagement, mutual support, and authentic relationship development over months or years. Negotiating guest post placements requires understanding editorial guidelines, audience expectations, and value exchange in ways automated systems cannot navigate effectively.

Crisis Management and Reputation Response require rapid judgment under pressure. When negative press emerges or a PR crisis develops, automated responses risk tone-deaf messaging that escalates problems. Human experts must assess legal implications, craft appropriate responses, coordinate with stakeholders, and manage communications with empathy and strategic foresight.

Content Requiring First-Hand Expertise, especially in technical, medical, legal, or financial topics, must demonstrate verifiable expertise. According to StraightNorth’s automation limits guide, strategy, empathy-driven content, and relationship-building require human judgment and cannot be fully automated (StraightNorth, 2025). E-E-A-T requirements mean authors must have credentials, professional experience, and demonstrable authority that automated content cannot fake.

These limitations aren’t temporary technological constraints that future AI will overcome. They represent fundamental differences between pattern matching and genuine understanding, between simulated empathy and authentic human connection. Successful automation strategies acknowledge these limits and design workflows accordingly, using strategic competitor analysis frameworks to identify where human insight creates competitive advantage.


Tasks That Require Human Judgment

Which jobs cannot be automated in SEO? Three core categories, plus several related tasks, demand human expertise and cannot be delegated to AI systems without significant quality and strategic risks.

Strategy Formulation encompasses all high-level decision-making about SEO direction and priorities. Examples include deciding which market segment to target with content based on business profitability analysis, prioritizing keyword opportunities according to business goals rather than just search volume or difficulty scores, and planning content calendars around product launches, seasonal trends, or competitive events. These decisions require understanding business objectives, competitive positioning, and market dynamics that exist outside the data AI can access. Automation can inform these decisions by providing data and analysis, but cannot make the final strategic call.

Empathy-Driven Content requires lived experience and authentic perspective that AI cannot fabricate. Examples include writing content about personal experiences like “What It’s Like to Manage a Remote Team” or “My Journey from Burnout to Work-Life Balance,” understanding emotional triggers for different audience segments based on psychological insight and market research, and crafting brand narratives that resonate with specific customer values and aspirations. Google’s E-E-A-T guidelines explicitly prioritize first-hand experience and demonstrable expertise, making authentic human authorship mandatory for content where experience signals trust and authority.

Relationship-Based Digital PR depends on trust development, authentic communication, and long-term reciprocity. Examples include personalized outreach to journalists that references their recent work and beat coverage, building long-term partnerships with industry influencers through consistent engagement and mutual value creation, and negotiating guest post placements by understanding editorial standards and audience expectations. These activities require human social intelligence, cultural awareness, and relationship management skills that automated systems cannot convincingly replicate. Template-based outreach fails precisely because recipients recognize the lack of authentic personalization.

Additional human-only tasks include crisis response and reputation management when negative press or customer complaints require empathetic, nuanced communication; ethical judgment calls about whether controversial topics align with brand values and risk tolerance; and interpreting ambiguous user intent when search queries have multiple possible meanings requiring business context to resolve.

The common thread: these tasks require contextual judgment, authentic human connection, or strategic business knowledge that exists outside the data sets AI models can access. Attempting to automate them produces generic, unconvincing, or strategically misaligned outputs that damage rather than enhance SEO performance.

Knowing what not to automate is critical, but the bigger risk is believing automation can run unsupervised.

The ‘Set-and-Forget’ Myth and Penalty Risks

The “set-and-forget” promise represents one of the most dangerous misconceptions in SEO automation. Many tools market themselves as “fully autonomous” or “zero-touch” solutions that run indefinitely without human intervention. The reality: effective automation requires ongoing monitoring, calibration, and human validation to prevent quality degradation and algorithmic penalties.

The “Set-and-Forget” Promise appeals to overwhelmed marketers seeking to eliminate the manual grind of SEO task execution. However, real-world failures demonstrate why autonomous automation backfires. Sites publishing 100 AI-generated product reviews in one week without human review receive manual actions for thin content. Automated internal linking scripts creating 1000+ low-value links in a single day get flagged for manipulation. AI-generated blog posts lacking author attribution or expertise signals lose rankings in algorithm updates targeting low-quality content.

Google’s Scaled Content Abuse Policy (2024-2026) specifically targets content produced at scale, whether by automation or by humans, primarily to manipulate rankings without adding value. According to Google’s spam policies documentation, scaled content abuse includes automated generation of large volumes of low-value content to manipulate rankings (Google Search Central, 2024). Commonly cited warning signs include repetitive phrasing patterns across multiple pages, lack of original research or first-hand insights, formulaic content structure suggesting template-based generation, and missing E-E-A-T signals like author expertise or cited sources.

The policy doesn’t ban automation or AI assistance — it penalizes bulk low-quality content regardless of production method. This distinction matters: automation combined with rigorous quality control and human validation passes scrutiny; automated bulk publishing without review triggers penalties.
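To catch repetitive phrasing across your own pages before publishing, a simple self-audit can compare pages by their overlapping three-word sequences (word shingles) using Jaccard similarity. This is a generic near-duplicate heuristic and does not describe how Google’s systems work. The 0.5 threshold is an assumption that you would need to tune on your own content.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict[str, str],
                    threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Page pairs whose shingle overlap meets a hypothetical threshold."""
    sets = {url: shingles(body) for url, body in pages.items()}
    pairs = []
    for u, v in combinations(sets, 2):
        score = jaccard(sets[u], sets[v])
        if score >= threshold:
            pairs.append((u, v, round(score, 2)))
    return pairs


if __name__ == "__main__":
    pages = {
        "/a": "the quick brown fox jumps over the lazy dog",
        "/b": "the quick brown fox jumps over the lazy cat",
        "/c": "how to repair a leaking kitchen faucet",
    }
    print(near_duplicates(pages))
```

Comparing every pair grows quadratically with page count. For large sites, techniques such as MinHash are the usual way to scale the same idea.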

Real Penalty Scenarios illustrate specific failure modes. A site publishes 100 AI-generated product reviews in one week with no human review, resulting in a manual action for thin content because the reviews lack specific product testing details and personal experience signals. An automated internal linking script creates 1000+ contextually irrelevant links in 24 hours and is flagged for manipulation because the link velocity and relevance patterns match known spam signatures. AI-generated blog posts published without author attribution or expertise signals lose 60% of organic traffic after a core update because they lack the E-E-A-T indicators Google’s algorithms increasingly prioritize.

Why Validation Loops Are Mandatory: every one of these examples lacks the same safeguard. Human review before publication, validation that automated outputs meet quality standards, and strategic oversight that prevents bulk publishing without added value all serve as insurance against penalties and quality degradation.
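One concrete guardrail against the internal-linking failure described above is to filter link suggestions by relevance and cap how many are applied per day. The excess is deferred to later days or to human review. The relevance scores are assumed to come from some semantic similarity model. The 0.75 cutoff and the daily cap of 20 are hypothetical values, since Google does not publish a safe link velocity.

```python
from dataclasses import dataclass


@dataclass
class LinkSuggestion:
    source: str
    target: str
    relevance: float  # 0-1, assumed to come from a semantic similarity model


def approve_links(suggestions: list[LinkSuggestion], min_relevance: float = 0.75,
                  daily_cap: int = 20) -> tuple[list[LinkSuggestion], list[LinkSuggestion]]:
    """Return (links to apply today, relevant links deferred by the cap).

    Suggestions below `min_relevance` are dropped entirely.
    """
    relevant = sorted(
        (s for s in suggestions if s.relevance >= min_relevance),
        key=lambda s: s.relevance,
        reverse=True,  # most relevant first
    )
    return relevant[:daily_cap], relevant[daily_cap:]


if __name__ == "__main__":
    approved, deferred = approve_links([
        LinkSuggestion("/a", "/b", 0.90),
        LinkSuggestion("/a", "/c", 0.50),
        LinkSuggestion("/d", "/e", 0.80),
        LinkSuggestion("/f", "/g", 0.95),
    ], daily_cap=2)
    print([s.target for s in approved], [s.target for s in deferred])
```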

Agentic validation workflows provide a middle ground between full manual review and risky autonomous publishing. The concept: AI generates a content brief → validation agent checks for keyword density, E-E-A-T elements, factual accuracy, and readability → human strategist reviews flagged issues and approves or rejects → writer executes the approved brief. This three-layer validation is designed to catch automation failures before publication while still saving significant time compared to fully manual processes.
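The routing part of this loop can be sketched as follows. Automated checks produce flags, and every brief still goes to a human, with the flags showing the reviewer where to look. The `Brief` fields and the checks are hypothetical simplifications. Missing author and sources stand in for E-E-A-T elements, and average sentence length stands in for readability. Factual accuracy is not checked here because it cannot be verified with simple rules.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Brief:
    title: str
    body: str
    author: str = ""
    sources: list[str] = field(default_factory=list)


def validate(brief: Brief, max_avg_sentence_words: int = 25) -> list[str]:
    """Rule-based checks standing in for the validation-agent step."""
    flags = []
    if not brief.author:
        flags.append("missing author attribution")
    if not brief.sources:
        flags.append("no cited sources")
    sentences = [s for s in re.split(r"[.!?]+", brief.body) if s.strip()]
    words = sum(len(s.split()) for s in sentences)
    if sentences and words / len(sentences) > max_avg_sentence_words:
        flags.append("long average sentence length")
    return flags


def route(brief: Brief) -> str:
    """Nothing skips the human step; flags only change what the reviewer sees."""
    flags = validate(brief)
    if flags:
        return "human review (flagged): " + "; ".join(flags)
    return "human approval (no flags)"


if __name__ == "__main__":
    print(route(Brief("Draft", "Short sentence. Another one.")))
```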

Automation drift, the gradual degradation of output quality over time, occurs when systems run without calibration. AI models trained on historical data become less accurate as conditions change. Automated processes optimized for past algorithm states fail when Google updates ranking factors. Human oversight prevents these drift scenarios by detecting early warning signs and adjusting automation parameters before problems escalate.
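A simple early-warning signal for drift is to compare a recent quality metric against its baseline. The sketch below assumes a metric such as the weekly share of automated outputs approved by human reviewers, and a 10% relative tolerance. Both the metric and the tolerance are illustrative assumptions.

```python
from statistics import mean


def drift_alert(baseline: list[float], recent: list[float], tolerance: float = 0.10) -> bool:
    """True when the recent average moves more than `tolerance` (relative) from baseline."""
    base = mean(baseline)
    if base == 0:
        return mean(recent) != 0
    return abs(mean(recent) - base) / base > tolerance


if __name__ == "__main__":
    approval_rate_q1 = [0.90, 0.92, 0.88]   # hypothetical baseline weeks
    approval_rate_now = [0.70, 0.72]        # hypothetical recent weeks
    print(drift_alert(approval_rate_q1, approval_rate_now))
```

An alert here is a prompt for a human to recalibrate prompts, rules, or thresholds. It is not something the system should act on by itself.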

To avoid these penalties, use this actionable safety checklist before automating any SEO task.

2026 Automation Safety Checklist

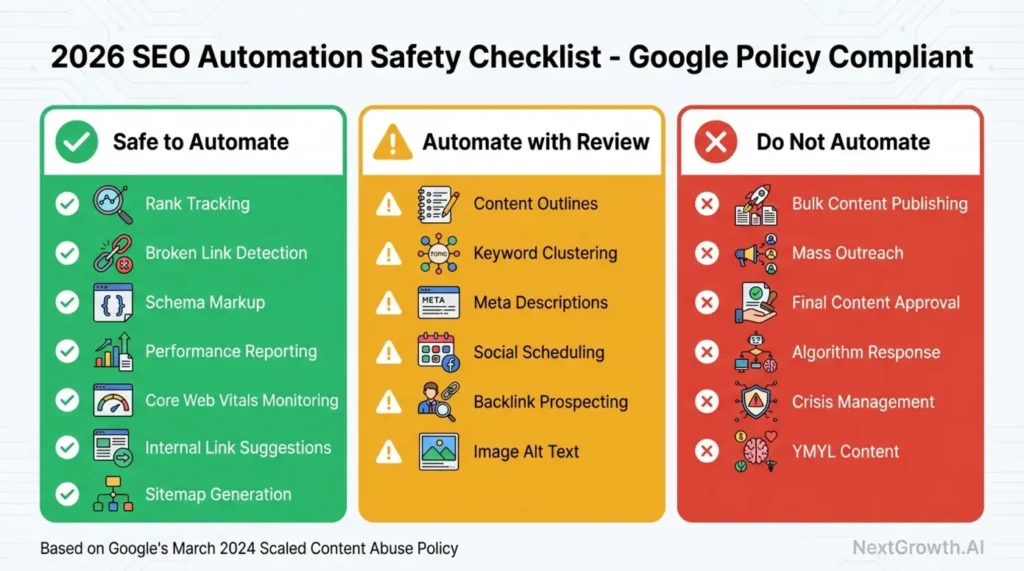

This checklist cross-references common automation tactics against Google’s current spam policies and quality guidelines, categorizing tasks into three risk levels: Green (Safe to Automate), Yellow (Automate with Human Review), and Red (Do Not Automate).

Green (Safe to Automate). These tasks can run autonomously with minimal human oversight:

- Rank tracking and position monitoring

- Broken link detection and 404 error alerts

- Schema markup generation from page content

- Performance reporting and dashboard updates

- Core Web Vitals monitoring and alerts

- Internal linking suggestions based on semantic analysis (contextually relevant, not bulk spam)

- XML sitemap generation and submission

- Robots.txt validation and crawl directive monitoring

Yellow (Automate with Human Review Required). These tasks benefit from automation but require human validation before implementation:

- Content outline generation (requires review for brand voice and strategic alignment)

- Keyword clustering and topic mapping (requires validation of strategic priorities)

- Meta description drafting (requires review for brand voice and uniqueness)

- Social media post scheduling (requires review for tone and timeliness)

- Backlink prospecting lists (requires validation of relevance and quality before outreach)

- Image alt text suggestions (requires review for accuracy and keyword appropriateness)

- Content gap analysis reports (requires strategic interpretation before action)

Red (Do Not Automate). These tasks require full human control:

- Bulk content publishing without individual review (violates scaled content abuse policy — quality and E-E-A-T signals require human verification)

- Mass outreach emails without personalization (damages relationships and brand reputation — authentic communication requires human touch)

- Final content approval and publication (E-E-A-T requires demonstrable human expertise and editorial judgment)

- Strategic pivots in response to algorithm updates (requires business context and competitive analysis humans must interpret)

- Crisis communication and reputation management (requires empathy, legal awareness, and strategic judgment)

- Content on YMYL topics without expert review (health, finance, legal content requires verifiable expertise)

According to Google’s March 2024 core update announcement, Google strengthened enforcement against automated content methods that lack original value (Google, 2024). This checklist reflects those current standards, but remains subject to change as Google’s policies evolve.

Important Note: This checklist is valid as of Q1 2026. Always cross-reference with the latest Google Search Central documentation and industry updates before implementing new automation strategies. When in doubt, err toward Yellow (human review) rather than Green (full automation).
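If you track automation decisions in code or a script, the checklist and its “when in doubt, choose Yellow” rule can be encoded as a lookup with a Yellow default. The task keys below are a hypothetical subset of the checklist items, shortened for use as identifiers.

```python
# Hypothetical subset of the 2026 checklist; keys are lowercase task names.
RISK_LEVELS = {
    "rank tracking": "Green",
    "broken link detection": "Green",
    "schema markup generation": "Green",
    "xml sitemap generation": "Green",
    "content outline generation": "Yellow",
    "keyword clustering": "Yellow",
    "meta description drafting": "Yellow",
    "backlink prospecting": "Yellow",
    "bulk content publishing": "Red",
    "mass outreach emails": "Red",
    "final content approval": "Red",
    "ymyl content": "Red",
}


def risk_level(task: str) -> str:
    """Checklist level for a task; anything unlisted defaults to Yellow (human review)."""
    return RISK_LEVELS.get(task.strip().lower(), "Yellow")


if __name__ == "__main__":
    for task in ["Rank Tracking", "Mass outreach emails", "AI podcast scripts"]:
        print(task, "->", risk_level(task))
```

Defaulting to Yellow means that a newly invented automation starts under human review until someone deliberately classifies it.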

With the safety guardrails in place, you’re ready to implement automation strategically. Here’s how to apply the 80/20 rule and build efficient workflows.

Strategic Implementation: The 80/20 Rule & Workflow

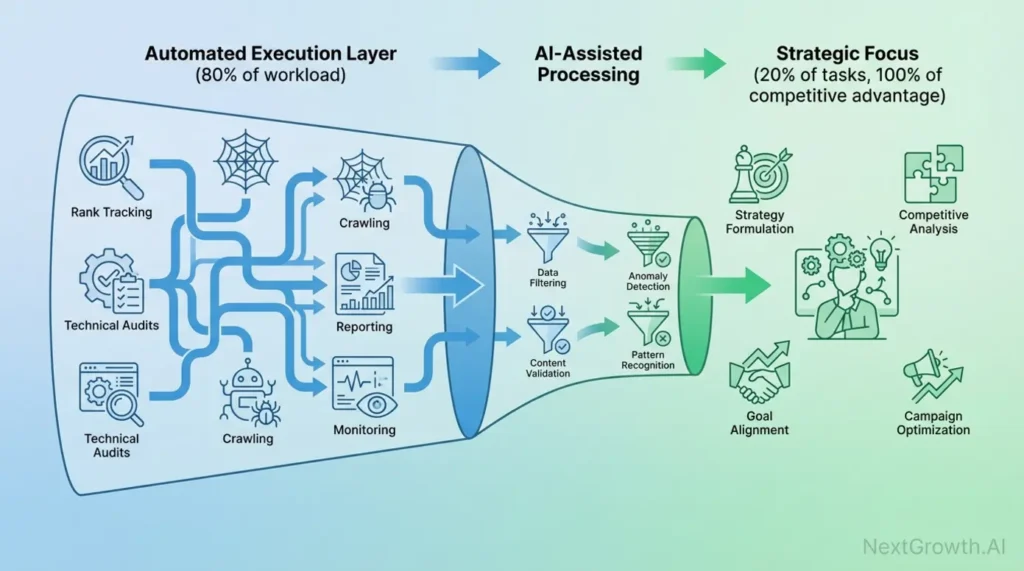

The 80/20 rule for SEO automation means using tools to handle 80% of repetitive data tasks so you can focus 100% of your strategic energy on the 20% of work that drives competitive advantage. This section maps automation opportunities to the 4 pillars of SEO and provides step-by-step examples you can implement today. By the end, you’ll have a replicable workflow for safe, high-impact automation.

The Pareto Principle holds that roughly 80% of results come from 20% of efforts. Applied to SEO, it suggests that about 80% of ranking improvement comes from 20% of optimization work: the strategic decisions about which keywords to target, which content gaps to exploit, and how to differentiate from competitors. Conversely, about 80% of SEO execution involves repetitive data tasks that consume time but don’t directly drive strategic advantage.

Automation’s role is to handle the 80% of execution grunt work (data collection, rank monitoring, technical audits, performance reporting) and free human time for the 20% of strategic thinking that creates competitive differentiation. The Marketing AI Institute’s 2024 report found that 67% of marketers cite lack of education and training as the primary barrier to AI adoption (Marketing AI Institute, 2024), which suggests an opening for teams that invest in automation literacy.

Implementing this philosophy requires mapping specific automation opportunities to established SEO frameworks, understanding which tools handle which tasks, and building workflows that balance efficiency with quality control.

The 80/20 Rule Applied to SEO Automation

What is the 80/20 rule for SEO? It means automating the roughly 80% of work that is repetitive and low-value so that human time can go to the 20% of high-value strategic work that drives competitive advantage.

The Pareto Principle, named after economist Vilfredo Pareto, observes that roughly 80% of effects come from 20% of causes. In an SEO context, 80% of results (ranking improvements, traffic growth, conversion optimization) come from 20% of efforts, specifically the strategic decisions about market positioning, content differentiation, and resource allocation. Conversely, 80% of time spent on SEO goes to lower-value execution tasks that support strategy but don’t directly create competitive advantage.

Applied practically: a 40-hour SEO workweek might include roughly 32 hours of execution tasks such as technical audits, rank tracking, backlink monitoring, report compilation, and tactical optimizations. That leaves only about 8 hours for strategic work: deciding which keywords align with business goals, planning content that addresses unique customer pain points, analyzing competitive moves, and determining where to invest resources for maximum return.

Automation can compress those 32 hours of execution to 5-10 hours of oversight and quality control. That frees 22-27 hours for strategic work, raising it from 8 hours to 30-35 hours a week, roughly four times the original allocation. The gain isn’t just about saving time; it’s about reallocating human attention from repetitive tasks to creative problem-solving.
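The reallocation arithmetic is easy to check. This short Python sketch uses the illustrative 40/32/8-hour split and the 5-10 hour oversight range from the paragraph above; these are assumed planning figures, not measured data.

```python
"""Check the time-reallocation arithmetic for an illustrative 40-hour SEO week."""

WEEK_HOURS = 40
EXECUTION_HOURS = 32                           # illustrative split, not measured
STRATEGY_HOURS = WEEK_HOURS - EXECUTION_HOURS  # 8 hours


def reallocation(oversight_hours: float) -> dict:
    """Return freed hours, new strategic hours, and the growth multiplier."""
    freed = EXECUTION_HOURS - oversight_hours
    new_strategy = STRATEGY_HOURS + freed
    return {
        "freed_hours": freed,
        "strategy_hours": new_strategy,
        "strategy_multiplier": new_strategy / STRATEGY_HOURS,
    }


if __name__ == "__main__":
    for oversight in (5, 10):
        r = reallocation(oversight)
        print(f"oversight={oversight}h freed={r['freed_hours']}h "
              f"strategy={r['strategy_hours']}h ({r['strategy_multiplier']:.2f}x)")
```

With 5 hours of oversight, strategic time grows from 8 to 35 hours (about 4.4x). With 10 hours of oversight, it grows to 30 hours (3.75x).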

Why this matters for competitive differentiation: Competitors can purchase the same automation tools, access the same rank tracking data, and run identical technical audits. The execution playing field levels when everyone automates effectively. Strategy becomes the sustainable competitive advantage — which keywords you prioritize based on business margins, which content angles you take based on customer research, how you position against competitors based on market analysis. These strategic choices, uniquely informed by your business context, create moats that automation tools alone cannot replicate.

The 80/20 framework also applies within automation itself. Not all automatable tasks deliver equal value. Focus first on automating the highest-volume, lowest-judgment tasks: rank tracking (runs daily, requires zero judgment), broken link monitoring (weekly, minimal judgment about priority), performance reporting (scheduled, pure data compilation). These quick wins deliver immediate time savings with minimal setup complexity.

The 80/20 rule gives you the philosophy. Now let’s map specific tools to the 4 pillars of SEO for tactical implementation.

Mapping Automation to the 4 Pillars of SEO

What are the 4 pillars of SEO? They provide a framework for categorizing automation opportunities: Technical SEO (site infrastructure), On-Page SEO (content optimization), Content (creation and quality), and Off-Page SEO (authority and links).

The 4 pillars organize SEO work into distinct categories, each with different automation opportunities and constraints. Understanding which tasks in each pillar can be automated helps you build comprehensive workflows while maintaining quality standards.

| Pillar | Automatable Tasks | Automation Level | Tools/Methods |
|---|---|---|---|
| Technical SEO | Site crawls, schema markup, Core Web Vitals monitoring, broken link detection, redirect validation, XML sitemap generation, robots.txt monitoring | Green (Fully Automatable) | Screaming Frog, Sitebulb, PageSpeed Insights API, Schema.org generators |
| On-Page SEO | Internal link suggestions, keyword density monitoring, header tag optimization alerts, image compression, meta tag templates | Yellow (Review Required) | Semantic analysis tools, SEO plugins, image optimization APIs |
| Content | Keyword clustering, content gap analysis, outline generation, readability scoring, competitive content analysis | Yellow (Review Required) | AI content tools, clustering algorithms, gap analysis platforms |
| Off-Page SEO | Backlink monitoring, broken link prospecting, brand mention tracking, competitor backlink analysis, link opportunity identification | Yellow (Review Required) | Ahrefs API, Moz API, Mention.com, prospecting tools |

Technical SEO offers the highest automation potential because its tasks are rule-based and objective. Automated site crawls via Screaming Frog or Sitebulb identify technical issues such as broken links, redirect chains, duplicate content, and indexing problems without human judgment. The PageSpeed Insights API returns Core Web Vitals and performance data that a scheduled job can track, raising an alert when scores decline. Schema markup generation creates structured data from page content using the Schema.org vocabulary. XML sitemap generation and robots.txt validation help search engines crawl and index content efficiently.

These tasks are Green (fully automatable) because they follow consistent rules, produce objective outputs, and require minimal contextual judgment. Human oversight remains valuable for prioritizing fixes based on business impact, but the detection and reporting processes run autonomously.
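Schema generation is one of the Green tasks above. Here is a minimal sketch that assumes page metadata (headline, author, publish date, URL) is already available from your CMS or crawl export. `PageMeta` and the helper names are hypothetical. The sketch emits a basic Schema.org `Article` object as JSON-LD, the structured-data format Google recommends.

```python
"""Generate Schema.org Article markup as a JSON-LD <script> tag."""
import json
from dataclasses import dataclass


@dataclass
class PageMeta:
    # Hypothetical fields; map them from your CMS or crawl export.
    headline: str
    author_name: str
    date_published: str  # ISO 8601 date, e.g. "2026-01-15"
    url: str


def article_jsonld(page: PageMeta) -> dict:
    """Build a minimal Article object using Schema.org property names."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": page.headline,
        "author": {"@type": "Person", "name": page.author_name},
        "datePublished": page.date_published,
        "mainEntityOfPage": page.url,
    }


def to_script_tag(data: dict) -> str:
    """Serialize to a <script> tag for the page <head>.

    Escaping "</" as "<\\/" keeps a stray "</script>" inside a value from
    closing the tag early; JSON parsers read "\\/" back as "/".
    """
    body = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"


if __name__ == "__main__":
    meta = PageMeta("Can SEO Be Automated?", "Jane Doe", "2026-01-15",
                    "https://example.com/seo-automation")
    print(to_script_tag(article_jsonld(meta)))
```

Automation produces the markup, but someone should still spot-check it. Run the output through Google's Rich Results Test or the Schema Markup Validator before deploying, and confirm that it matches the visible page content.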

On-Page SEO tasks benefit from automation but require human review to maintain quality and strategic alignment. Internal link suggestions based on semantic analysis can identify topically related pages, but humans must verify the links provide user value and don’t create over-optimization patterns. Meta description generation using AI can draft options quickly, but brand voice and uniqueness require human review. Image alt text suggestions can describe visual content, but accuracy and keyword appropriateness need validation.

These are Yellow (automate with review) because they involve brand-specific judgment about tone, messaging, and strategic priorities that algorithms cannot fully capture.

Content automation focuses on research and structural support rather than full content generation. Keyword clustering groups related search terms by topic, enabling comprehensive content planning. Content gap analysis identifies topics competitors cover that you don’t, revealing opportunity areas. Outline generation suggests H2/H3 structure based on top-ranking content patterns. Readability scoring flags overly complex paragraphs or jargon-heavy sections.

All content automation remains Yellow because quality content requires human expertise, first-hand experience, and strategic judgment about which topics deserve investment. Automation supports research and structure; humans provide insight and voice.
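Keyword clustering can be done in several ways. One common approach is SERP-overlap clustering: two keywords belong together when their top-ranking pages largely coincide. The Python sketch below illustrates that approach. It is not a description of how any particular tool works, and the `min_shared=3` threshold is an assumption you would tune.

```python
"""SERP-overlap keyword clustering: one common way to group related terms."""
from __future__ import annotations

from itertools import combinations


def cluster_by_serp_overlap(serps: dict[str, list[str]],
                            min_shared: int = 3) -> list[set[str]]:
    """Group keywords whose top-ranking URLs share at least `min_shared` entries.

    `serps` maps each keyword to its top-ranking URLs (for example the top 10),
    collected from whatever rank tracker or SERP data source you use.
    Grouping is transitive: if A overlaps B and B overlaps C, all three
    end up in one cluster (union-find).
    """
    parent = {kw: kw for kw in serps}

    def find(kw: str) -> str:
        while parent[kw] != kw:
            parent[kw] = parent[parent[kw]]  # path halving keeps trees shallow
            kw = parent[kw]
        return kw

    for a, b in combinations(serps, 2):
        if len(set(serps[a]) & set(serps[b])) >= min_shared:
            parent[find(a)] = find(b)

    clusters: dict[str, set[str]] = {}
    for kw in serps:
        clusters.setdefault(find(kw), set()).add(kw)
    return list(clusters.values())


if __name__ == "__main__":
    sample = {
        "seo automation": ["a.com/1", "b.com/2", "c.com/3", "d.com/4"],
        "automate seo": ["a.com/1", "b.com/2", "c.com/3", "e.com/5"],
        "schema markup": ["x.com/1", "y.com/2", "z.com/3", "w.com/4"],
    }
    print(cluster_by_serp_overlap(sample))
```

Because grouping is transitive, a loose threshold can chain unrelated terms into one large cluster. That is one reason clustering output stays Yellow and gets a human review before it drives the content plan.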

Off-Page SEO automation tracks link opportunities and brand mentions but cannot replace relationship-building. Backlink monitoring tracks new and lost links automatically. Broken link prospecting identifies 404 pages on competitor sites where your content could replace dead links. Brand mention tracking alerts when your company gets mentioned without links. Competitor backlink analysis reveals where competitors acquire authority.

These tasks are Yellow because identifying opportunities is automatable, but outreach requires personalized communication and relationship management that humans must handle to be effective.
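The "new and lost links" part of backlink monitoring comes down to comparing two snapshots. The sketch below assumes you export backlink lists to CSV from your backlink tool. The column names `source_url` and `target_url` are hypothetical placeholders, since each tool uses its own headers.

```python
"""Diff two backlink snapshots to report new and lost links."""
from __future__ import annotations

import csv
from pathlib import Path


def load_links(csv_path: Path, source_col: str = "source_url",
               target_col: str = "target_url") -> set[tuple[str, str]]:
    """Read one snapshot as (linking page, your page) pairs.

    The default column names are placeholders; pass your tool's headers.
    """
    with csv_path.open(newline="", encoding="utf-8-sig") as f:
        return {(row[source_col].strip(), row[target_col].strip())
                for row in csv.DictReader(f)}


def diff_links(previous: set[tuple[str, str]],
               current: set[tuple[str, str]]):
    """Return (new_links, lost_links), each sorted for stable reports."""
    return sorted(current - previous), sorted(previous - current)


if __name__ == "__main__":
    import sys
    new, lost = diff_links(load_links(Path(sys.argv[1])), load_links(Path(sys.argv[2])))
    print(f"{len(new)} new, {len(lost)} lost")
```

A "lost" link here only means it is missing from the latest export. Backlink tools recrawl on their own schedules, so confirm a loss before spending outreach effort on it.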

Traditional automation tools handle these tasks independently. But what if your automation system could audit itself before you see the output?

Agentic SEO Workflows: AI Auditing AI

Agentic workflows go a significant step beyond single-step automation. Instead of a two-stage flow (AI generates output, human reviews), agentic systems add validation layers: AI generates, AI audits, AI revises, and a human approves. This multi-agent approach catches errors before human review. In the content-brief example below, it cuts human time per brief from about 30 minutes of manual creation to about 5 minutes of review while maintaining quality standards.

Agentic workflows are automation systems where multiple AI agents perform different specialized roles before final human approval. One agent generates output, another audits that output against quality standards, a third synthesizes feedback and produces revised versions, all before a human strategist sees the final result. This differs fundamentally from single-step automation because it adds pre-review validation that catches common AI failures automatically.

Example agentic workflow in detail:

Prerequisites: Access to AI models (OpenAI GPT-4, Claude, or similar), automation platform (n8n, Make.com, or LangGraph for Python), quality criteria definitions (keyword density limits, E-E-A-T requirements, readability targets), human approval workflow (Slack, email, or project management system).

Step 1: Set up Agent 1 (Content Brief Generator) to analyze the top 10 SERP results for your target keyword. The agent extracts common H2/H3 heading patterns, identifies content gaps where top results lack coverage, generates an outline for a roughly 2,000-word article with keyword placement recommendations, and includes People Also Ask (PAA) questions from the search results.

Step 2: Configure Agent 2 (Quality Auditor) to review the brief against Google’s helpful content guidance. The agent checks for keyword stuffing by calculating keyword density and flagging anything above 2% (a common rule of thumb, not a Google threshold). It verifies E-E-A-T elements by confirming that the brief includes expert attribution suggestions and first-hand perspective directives. It also scores readability using the Flesch-Kincaid formulas and identifies missing FAQ content by comparing the brief against PAA data.
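Two of the auditor's checks, keyword density and Flesch-Kincaid grade level, are mechanical enough to compute without a model. The sketch below is an illustration with several assumptions. The syllable counter is a rough vowel-group heuristic. Density follows one common definition among several. The 2% and grade-9 defaults are rule-of-thumb settings, not Google requirements. The E-E-A-T and FAQ checks still need a model or a human and are not covered here.

```python
"""Mechanical checks for a hypothetical Quality Auditor agent (Step 2)."""
from __future__ import annotations

import re

WORD_RE = re.compile(r"[A-Za-z']+")


def keyword_density(text: str, keyword: str) -> float:
    """Percent of words covered by exact-phrase matches of `keyword`.

    One common definition: matches * words_in_keyword / total_words * 100.
    """
    words = [w.lower() for w in WORD_RE.findall(text)]
    phrase = [w.lower() for w in WORD_RE.findall(keyword)]
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    matches = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * matches * n / len(words)


def count_syllables(word: str) -> int:
    """Rough vowel-group heuristic; dictionary-based counters are more accurate."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1  # treat a trailing 'e' as silent ("make", "state")
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Grade level = 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    words = WORD_RE.findall(text)
    if not words:
        return 0.0
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


def audit(text: str, keyword: str, max_density: float = 2.0,
          max_grade: float = 9.0) -> list[str]:
    """Return human-readable issues; an empty list means both checks passed."""
    issues = []
    density = keyword_density(text, keyword)
    if density > max_density:
        issues.append(f"keyword density {density:.1f}% exceeds {max_density}%")
    grade = flesch_kincaid_grade(text)
    if grade > max_grade:
        issues.append(f"Flesch-Kincaid grade {grade:.1f} exceeds {max_grade}")
    return issues
```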

Step 3: Deploy Agent 3 (Synthesizer) to combine Agent 1’s output with Agent 2’s audit findings. The agent auto-revises the brief to fix flagged issues such as reducing keyword density if excessive, adding E-E-A-T directives if missing, simplifying complex sections flagged for readability, and incorporating FAQ questions identified as gaps.

Step 4: Route to Human Strategist for final approval. The strategist reviews the synthesized brief in 5 minutes instead of creating one manually in 30 minutes, approves with specific editorial notes if needed, or requests targeted changes to strategic positioning, then sends the approved brief to the writer.

Result: Each content brief takes about 5 minutes of human time instead of 30 minutes of manual creation, and most common quality issues (an estimated 70-80%) are caught automatically before human review.
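The control flow of Steps 1-4 can be sketched independently of any particular platform. In this Python sketch, the three agents are plain callables. In practice, each would wrap a model call made through n8n, LangGraph, or a provider SDK, and those calls are deliberately left out. The function names, type aliases, and `max_rounds` cap are assumptions for illustration.

```python
"""Control flow for the generate -> audit -> revise -> human-approve loop."""
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical agent signatures; each would wrap a model call in practice.
Generate = Callable[[str], str]           # keyword -> draft brief
Audit = Callable[[str], List[str]]        # brief -> issues (empty list = pass)
Revise = Callable[[str, List[str]], str]  # brief + issues -> revised brief


@dataclass
class BriefForReview:
    brief: str
    revision_rounds: int
    open_issues: List[str] = field(default_factory=list)


def run_brief_pipeline(keyword: str, generate: Generate, audit: Audit,
                       revise: Revise, max_rounds: int = 3) -> BriefForReview:
    """Agent 1 drafts, Agent 2 audits, Agent 3 revises until clean or out of rounds.

    The result always goes to a human strategist (Step 4); issues the agents
    could not resolve are attached rather than silently dropped.
    """
    brief = generate(keyword)
    issues = audit(brief)
    rounds = 0
    while issues and rounds < max_rounds:
        brief = revise(brief, issues)
        issues = audit(brief)
        rounds += 1
    return BriefForReview(brief=brief, revision_rounds=rounds, open_issues=issues)
```

Capping revision rounds prevents an issue the agents cannot fix from looping forever. Anything still open after the cap is handed to the strategist, which keeps the human approval step meaningful.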

Why this matters: Single-layer automation (AI writes, human catches all errors) still requires humans to identify and fix AI mistakes, which consumes significant review time. Agentic validation catches most errors automatically, transforming human review from error-hunting to strategic approval. The efficiency compounds when producing multiple briefs weekly.

Search Engine Journal’s 2024 analysis of scaled content warns that bulk AI content published without validation loops carries penalty risk (Search Engine Journal, 2024). Agentic validation addresses this risk directly by building quality control into the automation workflow itself.

Tools to build agentic workflows: n8n provides no-code automation with AI integrations and conditional logic for agent routing. LangGraph offers a Python framework specifically designed for multi-agent systems with state management. Make.com serves as a Zapier alternative with native AI tool connections and complex workflow support.

Another advanced tactic that few guides cover: automating internal linking based on semantic relationships rather than simple keyword matching.

Automating Semantic Internal Linking

Traditional internal linking relies on manual judgment and keyword matching — “This article mentions keyword X, so I’ll link to another article about X.” This approach misses non-obvious topical relationships and becomes time-prohibitive at scale. Semantic internal linking using vector embeddings solves both problems.

The Problem: Manual internal linking is subjective, time-consuming, and inconsistent. Writers link based on obvious keyword overlaps, missing deeper topical connections. Large content libraries (100+ articles) make comprehensive linking impossible to manage manually. The result: missed authority distribution opportunities and inconsistent user navigation paths.

The Solution: Semantic internal linking automates link discovery using vector embeddings — mathematical representations of content meaning that enable algorithmic similarity measurement.

Process:

- Convert content to vectors: Use OpenAI Embeddings API or similar service to convert each published article’s full text into a vector representation. These vectors encode semantic meaning — topically similar content produces similar vector patterns even when using different keywords.

- Calculate similarity scores: Compute cosine similarity between all article vector pairs. This produces a similarity matrix showing how closely related each article is to every other article on a semantic level, regardless of exact keyword overlap.

- Identify related content: For each article, extract the 5-10 most semantically similar articles based on highest similarity scores. High similarity indicates strong topical relationship that may warrant linking.

- Generate contextual suggestions: Use AI to analyze the content of both articles and suggest specific anchor text and placement. Example output: “Article A about [keyword X] is semantically similar to Article B about [keyword Y]. Suggest linking from paragraph 3 of Article A (where [concept Z] is discussed) to Article B with anchor text ‘[relevant phrase about concept Z]’.”

- Human review and approval: SEO strategist reviews suggestions to ensure links provide genuine user value, align with brand context, and don’t create over-optimization patterns. Approve or reject each suggestion individually.
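Once the embeddings exist, similarity scoring and top-k selection (the second and third steps above) need no special library. This sketch assumes vectors have already been fetched from an embeddings API and keyed by a hypothetical article slug. The toy 3-dimensional vectors stand in for real embeddings, which have hundreds or thousands of dimensions. `min_score` is an assumed cutoff you would tune during a small pilot.

```python
"""Suggest related articles from precomputed embedding vectors."""
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def related_articles(vectors: dict[str, list[float]], top_k: int = 5,
                     min_score: float = 0.0) -> dict[str, list[tuple[str, float]]]:
    """For each article, return up to `top_k` most similar other articles.

    Brute-force comparison of every pair: fine for a pilot of a few dozen
    articles; a vector database does this efficiently at larger scale.
    """
    suggestions = {}
    for slug, vec in vectors.items():
        scored = [(other, cosine(vec, other_vec))
                  for other, other_vec in vectors.items() if other != slug]
        scored = [pair for pair in scored if pair[1] >= min_score]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        suggestions[slug] = scored[:top_k]
    return suggestions


if __name__ == "__main__":
    toy = {  # 3-dimensional stand-ins for real embeddings
        "email-marketing-roi": [0.9, 0.3, 0.1],
        "customer-lifetime-value": [0.8, 0.4, 0.2],
        "office-party-ideas": [0.1, 0.2, 0.95],
    }
    for slug, related in related_articles(toy, top_k=1).items():
        print(slug, "->", related)
```

The output of this step is a candidate list only. Anchor-text generation and the human approval pass still decide which links actually ship.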

Benefits:

Discovers non-obvious relationships: Vector similarity identifies topical connections humans might miss. An article about “email marketing ROI” might be semantically similar to one about “customer lifetime value calculation” even though they don’t share obvious keywords. The semantic connection (both discuss measurement and metrics) creates a valuable user navigation path.

Scales across large libraries: Calculate similarity for 1000+ article pairs in minutes rather than months of manual review. The computational cost is low; the time savings are significant.

Maintains topical authority: Internal links help Google discover pages and understand how they relate to each other. Semantic linking builds those connections systematically rather than opportunistically, reinforcing comprehensive topic coverage and logical content organization.

Tools needed: OpenAI Embeddings API (or alternatives such as Cohere or Voyage AI) for vector generation, Pinecone or a similar vector database for storing and querying vectors at scale, n8n or a Python script for workflow orchestration, and Google Sheets or Airtable as the human review interface for approving suggestions.

This is an advanced tactic best suited for teams ready to scale beyond basic automation. Start with smaller content sets (20-50 articles) to validate the process and refine quality thresholds before expanding to full site coverage. The topical authority strategies framework provides additional context for understanding how semantic linking supports broader authority-building efforts.

Theory is useful, but let’s walk through a concrete example you can replicate today: automating 404 error monitoring.

Example Workflow: Automating 404 Monitoring

404 monitoring is one of the most repetitive tasks in technical SEO — checking for broken pages, identifying which ones had traffic or backlinks, prioritizing fixes based on impact, and tracking resolution status. This workflow reduces that process from 2 hours weekly to 15 minutes of focused review time.

Prerequisites:

- Screaming Frog SEO Spider (desktop tool or CLI version for automation)

- Google Search Console account with API access

- n8n (self-hosted Community Edition or a cloud plan) OR Python 3.8+ with the requests and pandas libraries

- Slack workspace for alerts (or email alternative)

- Basic technical knowledge for API setup

Step 1: Set Up Weekly Site Crawl

Configure Screaming Frog to crawl your entire site every Monday at 2:00 AM using its built-in scheduling feature, and export all URLs with 404 status codes to CSV. Alternatively, run the Screaming Frog command-line interface on a server through a cron job, or from an n8n Execute Command node on a self-hosted instance. Save the CSV output to a consistent location (Google Drive, Dropbox, or a local directory).
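Once the export lands in its folder, the next stage of the workflow only needs the 404 URLs. This sketch assumes the CSV has `Address` and `Status Code` headers. Check those names against your own export before relying on it, because the headers depend on which tab and Screaming Frog version you use.

```python
"""Pull 404 URLs out of a Screaming Frog CSV export (Step 1)."""
import csv
from pathlib import Path

# Assumed column headers; confirm them against your own export.
URL_COLUMN = "Address"
STATUS_COLUMN = "Status Code"


def load_404s(csv_path: Path) -> list:
    """Return URLs whose status code is 404, in export order."""
    # utf-8-sig tolerates a byte-order mark at the start of the file
    with csv_path.open(newline="", encoding="utf-8-sig") as f:
        return [row[URL_COLUMN] for row in csv.DictReader(f)
                if (row.get(STATUS_COLUMN) or "").strip() == "404"]


if __name__ == "__main__":
    import sys
    for url in load_404s(Path(sys.argv[1])):
        print(url)
```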

Step 2: Pull GSC Data for Cross-Reference

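The Search Console API no longer exposes crawl errors; Google retired that data along with the legacy Crawl Errors report. Instead, take the 404s Google has discovered from the Page indexing report ("Not found (404)") in the Search Console interface, or check individual URLs with the URL Inspection API. Then cross-reference your Screaming Frog export with two other sources. Backlink counts come from a backlink tool or the Search Console Links report; a URL with external links pointing to it is high priority. Click history comes from the Search Analytics API; a URL that had organic traffic in the last 6 months is medium priority. Use a Python script or the n8n Google Sheets integration to merge the datasets.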
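The merge itself is simple once each source is reduced to a URL-keyed lookup. This sketch builds a request body for the Search Console API's `searchanalytics.query` method and reshapes the `rows` it returns. The authenticated API call, for example through Google's API client library, is left out. Search Console reports clicks rather than sessions, so using average monthly clicks as a stand-in for the session thresholds in Step 3 is an assumption.

```python
"""Merge 404 URLs with backlink counts and Search Console click history (Step 2)."""
from __future__ import annotations

from datetime import date, timedelta


def search_analytics_body(end: date, months: int = 6) -> dict:
    """Request body for the Search Console API's searchanalytics.query method."""
    start = end - timedelta(days=30 * months)  # approximates a month as 30 days
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["page"],
        "rowLimit": 25000,  # per-request maximum; page through with startRow
    }


def clicks_by_page(rows: list[dict]) -> dict[str, float]:
    """Map page URL -> clicks from the `rows` list of a query response."""
    return {row["keys"][0]: row.get("clicks", 0) for row in rows}


def merge_404_data(urls: list[str], backlinks: dict[str, int],
                   clicks: dict[str, float], months: int = 6) -> list[dict]:
    """One record per 404 URL; missing data counts as zero.

    URLs must match exactly across sources, so normalize protocol and
    trailing slashes first if your exports disagree.
    """
    return [
        {"url": u,
         "backlinks": backlinks.get(u, 0),
         "monthly_clicks": clicks.get(u, 0) / months}
        for u in urls
    ]
```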

Step 3: Prioritize Fixes Using Impact Scoring

Apply priority scoring logic. High priority = a 404 with 5+ backlinks OR 100+ monthly sessions over the last 6 months. Medium priority = 1-4 backlinks OR 10-99 monthly sessions. Low priority = everything else (no backlinks and fewer than 10 monthly sessions). Sort the list by priority to surface the highest-impact issues first.
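The scoring rules translate directly into code. This sketch expects each record to carry `backlinks` and `monthly_sessions` keys; those key names are hypothetical, so rename them to match whatever your merge step produces. Inside a tier, it breaks ties by backlinks and then by traffic, which is a design choice rather than part of the rules above.

```python
"""Impact scoring for 404s, using the Step 3 thresholds."""
from __future__ import annotations

PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def priority(backlinks: int, monthly_sessions: float) -> str:
    """Classify a 404 as high, medium, or low priority."""
    if backlinks >= 5 or monthly_sessions >= 100:
        return "high"
    if backlinks >= 1 or monthly_sessions >= 10:
        return "medium"
    return "low"


def prioritize(pages: list[dict]) -> list[dict]:
    """Attach a priority label and sort highest impact first.

    Within a tier, more backlinks rank first, then more traffic.
    """
    labelled = [{**p, "priority": priority(p["backlinks"], p["monthly_sessions"])}
                for p in pages]
    return sorted(labelled, key=lambda p: (PRIORITY_ORDER[p["priority"]],
                                           -p["backlinks"], -p["monthly_sessions"]))
```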

Step 4: Auto-Generate Alerts for Action

Send automated Slack message to #seo-fixes channel every Monday morning at 8:00 AM with high-priority 404s listed. Include URL, backlink count, historical traffic data, and suggested action (redirect to relevant page or restore content). Alternatively, auto-create Jira tickets with the same information for team tracking.
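Slack incoming webhooks accept a JSON body with a `text` field. That makes the alert step possible with only the Python standard library, as in this sketch. The message layout, the record keys (including the `action` suggestion), and the `SLACK_WEBHOOK_URL` environment variable name are assumptions. The webhook URL itself comes from your Slack app settings and should stay out of source code. The Jira alternative is not shown.

```python
"""Format and send the Monday 404 alert to Slack (Step 4)."""
import json
import urllib.request


def format_alert(pages: list) -> str:
    """Build a plain-text Slack message, one line per high-priority 404."""
    lines = [f"*{len(pages)} high-priority 404s this week*"]
    for p in pages:
        lines.append(
            f"- {p['url']} | backlinks: {p['backlinks']} | "
            f"sessions/month: {p['monthly_sessions']} | suggested: {p['action']}"
        )
    return "\n".join(lines)


def post_to_slack(webhook_url: str, text: str) -> int:
    """POST the message to a Slack incoming webhook and return the HTTP status."""
    payload = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        webhook_url, data=payload,
        headers={"Content-Type": "application/json"}, method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    import os
    message = format_alert([
        {"url": "https://example.com/old-guide", "backlinks": 7,
         "monthly_sessions": 140, "action": "301 to /new-guide"},
    ])
    # SLACK_WEBHOOK_URL is a hypothetical environment variable name.
    print(post_to_slack(os.environ["SLACK_WEBHOOK_URL"], message))
```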

Step 5: Human Review and Implement Fixes

The SEO manager reviews the alert every Monday morning (15 minutes) and decides how to fix each high-priority URL. The options are to implement a 301 redirect to the most relevant existing page, restore the content if it had value and aligns with current strategy, or let the 404 stand if the page had low value and no strategic importance. Track fixes in your project management system.

Result: 404 monitoring drops from 2 hours per week of manual GSC review and cross-referencing to 15 minutes per week of prioritized fix review. Time savings: about 105 minutes weekly, or roughly 91 hours annually.

Important note: This workflow is a template. Adapt crawl frequency (daily for large sites with frequent updates, monthly for smaller stable sites), priority thresholds (adjust backlink counts and traffic minimums based on your site size), and alert channels (email, project management tools, or dashboard instead of Slack) to match your team’s specific needs and resources.

Automation is powerful, but it’s not a universal solution. Here’s when you should avoid it entirely.

When Manual Processes Still Win

Automation delivers significant efficiency gains for data-heavy, rule-based tasks. However, specific situations exist where manual SEO processes remain superior to automated alternatives. Understanding these boundaries prevents quality degradation and strategic misalignment.

Crisis Management & Reputation Response demands human judgment under pressure. When your brand faces negative press, customer complaints going viral, or PR crises developing in real-time, automated responses are tone-deaf and actively harmful. Crisis communication requires empathy, legal awareness, stakeholder coordination, and rapid strategic decision-making that AI systems cannot provide. Human experts must assess reputational risk, craft appropriate messaging, coordinate with legal and executive teams, and manage communications with sensitivity to context and consequences.

Content Requiring Deep Expertise, especially on YMYL topics (Your Money or Your Life: health, finance, legal, safety), cannot be automated without risking misinformation and penalties. E-E-A-T-critical content must demonstrate verifiable expertise through author credentials, professional experience, and authoritative sourcing. Medical advice requires licensed healthcare professionals. Financial planning needs certified financial advisors. Legal guidance demands qualified attorneys. Technical how-tos for high-stakes industries must come from practitioners with proven track records. Automation tools should assist with research and outlining, but human experts with verifiable credentials must author, review, and sign off on final drafts.

Strategic Pivots during algorithm updates or market shifts require human analysis and rapid adaptation. Consider a core update like March 2024's, which folded the helpful content system into Google’s core ranking systems. Automated systems cannot quickly interpret what such an update changes in ranking, analyze the resulting SERP shifts, or adjust content strategy in real time. Human strategists must study ranking pattern shifts, reinterpret which quality signals matter most, and pivot content approaches based on competitive intelligence and search behavior changes that algorithms cannot yet process contextually.

“Set-and-Forget” Automation Strategies Triggering Spam Filters represent a critical risk highlighted in Google’s enforcement patterns. Automation requires monitoring loops, not full autonomy. Systems that publish content at scale without human review, generate bulk backlinks without relationship context, or implement technical changes without validation quickly trigger spam detection. Automation tools should assist human judgment, not replace it entirely.

When to seek professional guidance: If your content touches YMYL topics (health, finance, legal), consult subject matter experts with verifiable credentials before publishing, regardless of automation level. For high-stakes content where accuracy matters for user safety or financial decisions, use automation for research and outlining but require human experts to write, review, and approve final versions before publication.

Alternative approaches for edge cases: Hybrid workflows work best — use automation for time-consuming research, data analysis, and structural scaffolding, then require human expertise for strategic interpretation, quality assurance, and final approval. This balances efficiency with quality control and risk mitigation.

The goal isn’t avoiding automation entirely but understanding where human judgment creates value that algorithms cannot replicate. Strategic tasks requiring business context, relationship-based activities demanding authentic communication, and content requiring demonstrable expertise all remain firmly in the human domain despite advancing AI capabilities.

Now that you understand feasibility, limits, and implementation strategies, here are the most common questions SEOs ask about automation.

Frequently Asked Questions

Can I automate SEO?

Yes, SEO can be automated — approximately 30% of tasks like rank tracking, technical audits, reporting, and schema markup are fully automatable using AI tools and agents. However, 70% of SEO work — including strategy, content quality control, and relationship-building — still requires human expertise. The most effective approach combines automated execution with human strategic oversight to maximize efficiency without sacrificing quality or risking penalties.

Which jobs cannot be automated?

In SEO, strategy formulation, high-quality content writing requiring lived experience, and relationship-based digital PR cannot be fully automated. These tasks require human empathy, intuition, and authentic communication to build trust and meet Google’s E-E-A-T standards. Automated systems lack the contextual judgment needed for strategic planning, brand voice consistency, and personalized outreach that drives long-term results.

What is the 80/20 rule for SEO?

The 80/20 rule for SEO automation means using tools to handle 80% of the workload — specifically repetitive data tasks like crawling, monitoring, and reporting — so you can focus 100% of your strategic energy on the 20% of tasks that drive high-impact results. This includes creative strategy, content quality, and competitive positioning. By automating execution-heavy tasks, SEO teams reclaim 20+ hours per week for work that creates competitive differentiation.

Can SEO be done by AI?

AI can significantly assist with SEO by automating research, drafting content outlines, and analyzing data. However, it is not a complete replacement for human SEOs. Relying solely on AI without human review can lead to generic content, factual errors, and a lack of brand voice. The most effective approach uses AI for execution speed and data processing, while humans provide strategic direction, quality control, and E-E-A-T signals.

Is SEO dying due to AI?

No, SEO is not dying; it is evolving. While AI changes how users search and how content is produced, the need for optimization to ensure visibility remains critical. SEO is shifting toward a hybrid model where AI handles data-heavy execution and humans handle strategy, quality, and relationship-building. Google’s policies (as of 2024-2026) don’t ban AI content — they penalize low-quality scaled content, making human oversight more important than ever.

Conclusion

For efficiency-seeking marketers and business owners, SEO can be automated using the 30/70 hybrid architecture: 30% of tasks (reporting, technical audits, schema markup) are fully automatable, while 70% (strategy, content quality, relationships) require human judgment. Research shows that 65% of organizations now use generative AI, yet 74% struggle to scale AI value, which suggests that automation rarely delivers on its own without human oversight. The best approach combines AI agents for execution, human strategists for direction, and validation loops to prevent penalties.

This hybrid model isn’t about replacing SEOs — it’s about reclaiming 20+ hours per week from repetitive tasks so you can focus on competitive strategy, brand differentiation, and high-value content that drives results. Automation is the execution layer; strategy is your competitive moat.

Next Step: Map your current SEO workload to the Traffic Light Automation Matrix from this guide. Identify Green tasks (rank tracking, broken link monitoring) to automate this quarter, Yellow tasks (content outlining, meta description generation) to augment with AI plus human review, and Red tasks (strategy, digital PR) to keep human-led. Start small — automate one repetitive task this week and measure the time saved.